This is the second post of a two-parter. Please read part 1 for context and additional information.

3rd Party Libraries

As mentioned in part 1, Scala is not binary backwards-compatible between major releases. Although code compiled with 2.10 may work properly with libraries compiled against 2.9.x, this is hardly recommended and may result in a lot of subtle error conditions at runtime that are hard to track down. As a result, the recommended practice is to upgrade all Scala dependencies to versions compiled against 2.10. Fortunately the Scala ecosystem is very active, so most common libraries had 2.10 builds on announcement day, but you are still likely to encounter libraries that need to be upgraded because:

- They're lagging behind the Scala release schedule and simply haven't released 2.10-compatible binaries (fortunately, this seems to be extremely rare; in practice all our dependencies were otherwise resolved);

- The specific version you're using does not have a 2.10-compatible release and likely never will (Squeryl 0.9.5-2);

- A 2.10-compatible official release is out but hasn't yet been published to the central repository (Scalatra 2.0.5).

My experience shows that with a bit of diligence, practically all our (myriad) dependencies were fairly easy to migrate to 2.10. In most cases this simply entails switching to the appropriate artifact ID suffix (_2.10 instead of _2.9.2, see part 1 for associated rant); in other cases I had to upgrade to a later official release, and in rare cases I had to dig in and replace a dependency with an appropriate fork or snapshot version. Here is a list of migration notes for some of our dependencies:

| Library | Previous | New version | Comments |
|---|---|---|---|
| Scala-Time | 0.6 | nscala-time 0.2 | The original library appears to be abandoned and was forked on GitHub. The package changed from org.scala-tools.time to com.github.nscala-time.time |
| ScalaMock | 2.4 | 3.0.1 | The 3.x series runs natively on Scala 2.10 without proxy factories and the like. Syntax is a bit odd but works like a charm. The ScalaTest integration is compiled against ScalaTest 2.0-M5b, which forced us to upgrade. |
| ScalaTest | 1.8 | 2.0-M5b | Minor API changes: Spec→FunSpec, parameters changed in Suite.run() |
| Squeryl | 0.9.5-2 | 0.9.5-6 | |
| Argot | 0.4 | 1.0.0 | |
| Scala I/O | 0.4.1-seq | 0.4.2 | |
| Lift JSON (+ext) | 2.4 | 2.5-M4 | |
| Lift Facebook | 2.4 | 2.5-SNAPSHOT | Had to exclude net.liftweb:lift-webkit_2.10 to avoid dependency conflicts |
| Akka | 2.0.4 | 2.1 | Scheduling now requires an implicit ExecutionContext. See migration guide for details. |
| Scalatra | 2.0.4 | 2.0.5-SNAPSHOT | Official 2.0.5 is out, but has not yet shown up on the central repository, so I ended up going with the (frozen) 2.0.5 snapshot artifacts |
| Scalatra | 2.1.1 | 2.2.0 | scalatra-akka module has been folded back into the core artifact as of 2.2.0. |
| Graph for Scala | 1.5.1 | 1.6.1 | |

Scala Library Changes

The Scala class library itself has a number of changes you ought to be aware of before migrating to 2.10. The really big change is that Scala actors are deprecated in favor of Akka. You can still use them by importing the scala-actors artifact from the Scala 2.10 distribution, but it is recommended to migrate fully to the new actor system, as the old actors library is also likely to be obsoleted by 2.10.1. The gentle folk at Typesafe have provided a very comprehensive migration guide to assist your efforts.

The less pervasive API changes we ran into include:

- List.elements is deprecated in favor of List.iterator;

- TraversableOnce.toIndexedSeq no longer takes a type argument. This was actually quite pervasive in our codebase, causing plenty of compilation errors, and is easily worked around by removing the type parameter (which is extraneous to begin with);

- Scala actors' Actor.receive method is now public (previously protected). This had to be rectified in pretty much all of our existing actors by removing the protected modifier;

- Occasional subtle API changes requiring minor code fixes. For example, see this question on StackOverflow.
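
To make the first two items concrete, here is a minimal sketch of the before/after shape of the code. The object and value names are made up for illustration and are not taken from our codebase; the 2.9.x forms appear only in comments.

```scala
object LibraryChangesSketch {
  // Hypothetical sample data, for illustration only.
  val libraries = List("squeryl", "argot", "scalatra")

  // 2.9.x: libraries.elements
  // 2.10:  libraries.iterator
  def firstLibrary: String = libraries.iterator.next()

  // 2.9.x accepted libraries.toIndexedSeq[String]; 2.10 rejects the type
  // argument, so drop it and ascribe the expected type if one is needed.
  val indexed: IndexedSeq[String] = libraries.toIndexedSeq
}
```

Since the type argument was redundant to begin with, removing it should not change what the code does.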

Summary

Opinions differ among members of our team - some predicted that the migration process would be much more complex, whereas personally, given the relatively high level of maturity I've come to depend on in the 2.9 series, the migration process actually ended up being significantly harder than I anticipated. Particularly disconcerting were the occasional compiler failures, which took a lot of time to track down.

Practically speaking, though, the whole migration process took less than 3 days (including documentation), did not involve additional teammates, and all problems were either resolved or worked around rather quickly. The Typesafe compiler team has been very helpful in analyzing and resolving the single bona-fide compiler bug we've run into, and the community as a whole (on Stack Overflow, Google Groups and elsewhere) was also extremely supportive and helpful.

On the whole, am I happy with the process? So-so. There is nothing here an experienced Scala developer should have serious trouble with, but it will take a lot more stability and predictability for Scala to gain mainstream acceptance in the industry, and that includes a much easier and more robust migration path to upcoming releases (yes, that includes migrating from 2.10 to 2.11 when it comes along).

That being said, Scala has been a terrific language to work with in the last couple of years, and the new features in 2.10 (particularly reflection, macros and string interpolation) should make this an extremely worthwhile upgrade. We still have a lot of regression testing to do on the new version, and if anything interesting pops up I'll be sure to post about it separately (or bitch about it on Twitter...)

I just spent a few days migrating our codebase from Scala 2.9.2 to Scala 2.10. A quick search found very little in the way of migration guides and studies, so it seemed appropriate to document my experiences and offer what tips I managed to collect on the way.

General Observations

This process should not be undertaken lightly, and its implementation inevitably depends on your resources and team composition. The group (or person) charged with migrating the codebase should, at the very least, include an experienced Scala developer not afraid to get his or her hands dirty. The migration is not an entirely smooth process - there are plenty of (fortunately fairly minor) breaking changes in the Scala APIs, and since Scala does not yet feature binary backwards compatibility between major versions (2.9→2.10→2.11...), expect some inevitable library upgrades and the potential complexities inherent in any such update. The specific issues I encountered are documented in their respective sections below.

Unfortunately there is not much I can offer in the way of preparation; these are the obvious steps:

- Familiarize yourself with Scala 2.10. Make sure you know what you’re getting in exchange for investing time and effort on the migration process and early-bird issues;

- Work in an isolated branch and pull changes from the master branch often;

- Commit early, commit often. Try to keep your commits small and well-documented, so you have good revert checkpoints for when things go wrong;

- Keep the affected code to a minimum. The fewer changes, the easier it will be to isolate problems down the road.

If you’ve read so far and still can’t wait for 2.10.1, I hope the next few sections will save you some time and pain.

Toolchain

To start things off I switched the build to use Scala 2.10. Our project runs on Maven in lieu of SBT (topic for another post), and we use David Bernard’s excellent scala-maven-plugin, which deduces the Scala version from the scala-library dependency. The convention for the Scala version suffix has changed between 2.9 and 2.10: for example, argot_2.9.2 is now argot_2.10 - no revision for the 2.10 series. This rendered the suffix macro we employ useless, because annoyingly the Scala libraries themselves (e.g. scalap or scala-compiler) actually use the full version number, so we ended up needing two macros (a “raw” Scala version and a suffix). Fortunately, at least as far as Maven is concerned the rest was smooth sailing.

I next turned my attention to IntelliJ IDEA - I wanted to make sure an existing workspace can be reused with the migrated codebase, otherwise my entire team will have to undergo the inconvenience of starting from scratch on a clean working copy. Fortunately the process turned out to be quite painless (on IDEA 12.0.3 with Scala plugin build 0.7.121), with the following caveats:

- Code analysis appears to miss some cases of stricter compilation compared to 2.9.x (see below);

- Code analysis occasionally identifies relative import statements as erroneous (e.g. import util.Random);

- Project FSC settings had to be manually changed to use the 2.10 compiler bundle (it remained on the default 2.9.2). This proved to be a moot point, because:

- The much-touted IDEA external build mode finally works consistently for the first time (and it uses the significantly better SBT compiler)!

- On the negative side, IDEA does not seem to handle compiler failures (as opposed to compilation errors) gracefully, missing a lot of detail in the output. As I ran into quite a few of these (details below), I ended up doing most of the compilation tests with Maven directly.

Beyond setting up a build job for the new branch, Jenkins posed no issues.

To summarize, from a toolchain standpoint, this was actually a fairly smooth process.

Language/Compiler

After some initial compilation attempts I was surprised and somewhat disheartened to find that I had missed every single mark in my code risk predictions. Where I expected most language-level headaches I encountered none, and seemingly simple and risk-free code ended up taking most of the time I spent on this process.

First off, the Scala 2.10 compiler is much stricter than the earlier 2.9.x series. It has a few new heuristics that ended up triggering very often on our codebase; some are simply the result of messy code or design, but most are new best practices adopted by the compiler team at Typesafe:

- “warning: This catches all Throwables. If this is really intended, use `case _ : Throwable` to clear this warning.”

We wrap-and-throw or swallow a lot of exceptions, and for brevity’s sake often use the shorter catch { case _ => }. A catch block is analogous to a partial function from Throwable, and there are undoubtedly cases where the distinction matters. We’ll clean these up iteratively by qualifying that we’re only catching Exceptions.

- “non-variable type argument B[T] in type A[B[T]] is unchecked since it is eliminated by erasure”

The 2.10 compiler is actually quite helpful in warning you when generic code may not behave as expected. This is just one example of such a warning.

- “error: overloaded method methodName needs result type”

The 2.10 compiler requires you to specify result types on most (all?) types of method overloading. Not much work here.

- Case classes can no longer derive from other case classes, which makes a whole lot of sense if you consider the type system; 2.9.x had a whole variety of bugs and edge-cases relating to case class inheritance and companion objects, including some unwieldy constructor syntax. 2.10 simply does away with the complexity and potential ambiguity, which will break existing code. Since such class hierarchies are inherently problematic, I see this as an opportunity to refactor them properly; extractor objects were often useful for that task.

- In one case we ran into stricter cyclic dependency analysis on the part of 2.10 - an object extended a class and passed a member of another object as a constructor parameter. The second object referenced the first, which compiled fine under 2.9.2 but resulted in a cyclic dependency error with 2.10. As the original design was hard to understand I refactored the code to be a bit simpler, which resolved the problem satisfactorily.

- Manifests are deprecated in favor of TypeTags and the new reflection library built into 2.10 (certainly one of its most anticipated and celebrated features), but are still functional. We haven’t migrated to the new APIs yet, and that process will likely deserve a post all on its own; read this excellent answer on Stack Overflow for an introduction to TypeTags.
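
A few of the items above are easier to see in code. The following is only a sketch of the kind of fix each one calls for; every name in it (parsePort, describe, Endpoint, SecureEndpoint, render) is invented for illustration and does not come from our codebase.

```scala
object StricterCompilerSketch {
  // Catch-all handlers: `catch { case _ => ... }` now draws the
  // "catches all Throwables" warning. Qualifying the pattern states
  // that only Exceptions are meant to be handled.
  def parsePort(raw: String, fallback: Int): Int =
    try raw.trim.toInt
    catch { case _: Exception => fallback }

  // Overloads that call one another: declaring the result type on each
  // alternative avoids the "needs result type" error.
  def describe(port: Int): String = describe(port.toString)
  def describe(port: String): String = "port " + port

  // Instead of one case class extending another, keep the base a plain
  // class with a hand-written extractor in its companion object.
  class Endpoint(val host: String, val port: Int)
  object Endpoint {
    def unapply(e: Endpoint): Option[(String, Int)] = Some((e.host, e.port))
  }
  case class SecureEndpoint(override val host: String,
                            override val port: Int,
                            certificate: String) extends Endpoint(host, port)

  // Both the case class pattern and the extractor work in a match.
  def render(e: Endpoint): String = e match {
    case SecureEndpoint(h, p, _) => "https://" + h + ":" + p
    case Endpoint(h, p)          => "http://" + h + ":" + p
  }
}
```

The extractor keeps pattern matching on the base type available without relying on case class inheritance; whether it fits depends on how much of the generated case class machinery (equality, copy) the base class actually needed.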

Beyond these and the occasional minor syntax change, the 2.10 compiler proved a somewhat difficult beast to tame:

- Ran into crazily-detailed compiler failures that clearly (though long-windedly) indicated that I need to manually add scala-reflect to the classpath;

- Missing or conflicting dependencies, typically due to mishandling POMs, resulted in what I ended up dubbing the Spontaneous Compiler Combustion phenomenon: a cryptic compilation failure, complete with what appears to be an AST dump and full-blown debug information. Tracking the occasional familiar type name in the compiler log can be helpful in tracking down the dependency at fault (this was the case in all but one of the occurrences), but the error itself is completely inscrutable.

- The one case was, unfortunately, a proper compiler bug logged as SI-7109, which has to do with consuming a package-protected (or -private) trait/class from another trait/class with the same accessibility. Jason Zaugg of Typesafe (@retronym) was extremely helpful in analysing the compiler output and producing a reproduction case, which I haven’t been able to do on my own. Until a fix is included and released, I’ve worked around this by temporarily commenting out the problematic qualifiers.

- Update (2013-02-12): Ran into another compiler bug that causes a runtime ClassFormatError. We've managed to identify, reproduce and work around the problem; see SI-7120 on the Typesafe JIRA.

Lastly, it’s worth noting that the 2.10 compiler is significantly heavier than the older 2.9.x series: it appears to require 50-100% more permgen space, and compile times are up x2 on average and up to x10 on a clean, fresh build. This seems consistent on both my laptop and the build server, which use different CPUs and OS. A quick check (top and jstat -gcutil) showed the compiler process to be single-threaded and CPU-bound, as it consistently utilizes 100% of a single core. GC activity was low to the point of negligible, so it appears the new compiler is actually a step back in terms of compilation throughput. I hope subsequent 2.10.x releases focus primarily on compilation stability and performance.

That’s it for today; the next post is up and focuses on the Scala library, dependencies and miscellanea.

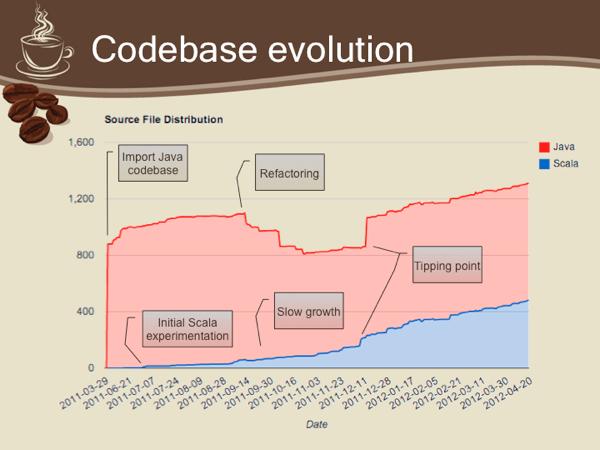

This post attempts to summarize and clarify a lecture given at the Botzia (Israeli Java user group) meeting on May 3rd, 2012. You can view the presentation in its entirety on SlideShare:

What is Scala?

Scala is a modern, statically-typed language designed to run on the Java platform. It's commonly perceived as an "evolutionary Java" and with good reason: Scala adds powerful features while significantly reducing Java's verbosity. It is a highly practical language in that it offers a very straightforward migration path for developers on existing platforms: Java developers will feel right at home with Scala, and .NET developers will not have to give up on a lot of the advantages C# has over Java.

For organizations with a preexisting codebase Scala offers an excellent balance between power and feature set, as well as perfect interoperability with existing Java code: although you certainly don't have to, you can freely use familiar libraries without a hitch, call into existing code and, for the most part, use your existing tool-chain with little or no impact.

Why did we pick Scala?

As mentioned above, Scala offers a very compelling migration path from an existing Java codebase. When we were setting up the new R&D center at newBrandAnalytics, we were already serving paying customers via a complex codebase. A complete rewrite was out of the question as we had contractual obligations, and we had to keep improving the existing product in order to maintain the competitive edge; it became very clear that we needed to maintain the existing codebase and refactor it incrementally.

An additional data point was that our core team members were highly experienced with Java development and quite comfortable with the platform; we also had a distinct preference for statically typed languages, which ruled several alternatives (particularly Clojure) right out. Scala seemed like a great compromise in that it allowed us to maintain and extend the existing codebase while enjoying advanced language features and improved veracity at little or no risk to the product itself.

How should I "sell" Scala to my boss?

"Safe" choice with proven track record: Scala is not an entirely new language, and has reached a maturity tipping point at 2.8; over the last two years the language has been gaining significant momentum, and has a number of strong advocates and success stories, including Twitter, Foursquare, LinkedIn and a whole host of others.

Scala as a better Java: You don't have to go all-in on Scala's advanced feature set, but can instead think of Scala as an improved Java with type inference, closures, advanced collection framework, traits and pattern matching; these features alone will increase your developers' happiness by an order of magnitude, and productivity will see a corresponding improvement.

Less code ⇒ less bugs: Scala code is far more concise than corresponding Java code, and helps the developer focus on the "what" instead of the "how". The resulting code is usually much shorter, clearer and simpler, which in turn helps lower bug count.

Helps hire better engineers: Great engineers not only love working with the latest technologies, but also love practical, incremental improvements to well-respected technologies. Merely saying "we use Scala" will help attract the sort of seasoned, reliable and professional engineers that you most want to hire.

Where does Scala put you at risk?

Learning curve: Although Scala largely derives from Java, it's a whole new language with a lot of features that take time to master. Don't expect your developers to be productive from day one; instead, provide opportunities for experimentation and encourage iterative development (and yes, give your developers time and mandate to throw away badly written early code and redo their work if necessary). There is actually a lot of material on Scala and an active and vibrant community; specifically, your developers can easily find help (and answers) on StackOverflow.

Rough edges: Scala is fairly new, and consequently a lot of things that you take for granted with Java are not as mature or robust with Scala. IDE support is a moving target: Eclipse and IntelliJ IDEA both have actively developed Scala plugins and both have occasional issues (spurious error highlighting, lackluster performance, wonky debuggers). The common build tools support Scala, but don't play as nicely; for example, only the native build tool (sbt) supports incremental compilation. Even the compiler itself is not entirely bug-free, although these are getting very rare indeed. Bottom line: expect occasional problems, and be patient while working around them; even better, encourage your engineers to participate in the community, file bugs and even offer patches where possible.

Production characteristics: While it runs on the JVM, Scala is not Java; there are subtle differences that you should be aware of when maintaining large-scale or highly-available software. Scala generates a great deal of synthetic code, which puts additional pressure on the PermGen space; synthetic stack frames may also exhibit significantly increased stack usage. Scala also creates a lot of intermediate objects at runtime, which results in added eden generation churn. You may want to profile your applications and tune the GC accordingly.

What's the bottom line?

Scala is fantastic. Our team at newBrandAnalytics is remarkably happier with Scala despite the occasional hitches, and I doubt any of us will consider going back to Java given the option. As long as you keep in mind that on the bleeding edge you may get cut, I definitely recommend taking the plunge, with contractors and die-hard traditional enterprises the possible exceptions.

(Cross-posted on the Delver Blog)

A little while ago I posted a job opening for the application engineer position at Delver, and one of the replies caught my interest: “so it’s a DevOps position?” A Google search later and I was astounded to find what I tried to explain has since grown into a fully fledged industry trend.

I’ve learned to be mistrustful of such trends; in my experience they tend to inflate and deflate regularly, and if you try to keep abreast of all the proposed improvements to the development process you’re going to drown in overhead. Still, a critical percentage of these trends have a valid rationale driving them: unit testing, concurrency constructs, event-driven application servers, RESTful interfaces – all of these have very solid theoretical and/or practical reasoning and have had significant impact on the software development field. An additional commonality is: each took several years to gain acceptance in leading R&D teams, and several more to become ingrained methodology.

The key word here is risk management, which is typically avoided or ignored altogether by the common developer. Don’t get me wrong: I come from a purely R&D background, and have shared that trait for years. What started me on a different line of thinking was the distinct pleasure of being woken up, once too often, by some poor NOC operator in the middle of the night, and getting mad enough to do something about it. Like most R&D personnel I was largely oblivious to the pains of deployment, availability, scaling, production troubleshooting and customer support, and had to learn my lessons the hard way. I believe most R&D people aren’t more minded of the pains inherent in each of these domains because of the simplest of reasons: they’ve never been challenged to do so.

This is where “DevOps” comes in. An application engineer (app engineer or “devops guy” if you will) has two primary objectives:

- Guide the R&D team in risk assessment.
Having a software-savvy operations team member participating in design reviews is a huge boon to risk management; a better app engineer will want to participate in the design process itself, not necessarily designing the actual feature†, but even a quick overview of the proposed design is usually enough to provide operational feedback. This, without fail, results in a better design: clearer error-handling semantics, better monitoring and configuration facilities, high availability baked into the design, and induction into the deployment/administration toolchain concurrently with development efforts. This in turn leads to much better overall estimates and reduced failure rate.

- Keep the system up and running! This entails more than just observing the monitoring system (in my opinion, the less time you spend that way the less you are likely to have to, ad vitam aut culpam). The application engineer is in the relatively unique position of being both the consumer and producer of his or her own tools; this is where the wheat is separated from the chaff: a great app engineer will forever strive to improve and automate every nonfunctional aspect of the system, diligently working towards that asymptotic 100% uptime‡. DevOps personnel are the go-to people for getting systems off the ground; they’ll sketch the solution out, provide short- and long-term plans for deployment, monitoring and administration solutions both system-wide and component-specific. They’ll devise automatic tools to identify problems and anomalies, they’ll work ever-more-specific endpoints into their monitoring system, and they’ll be happy doing it because contrary to nearly any other position in the industry their interests and the business’s inherently converge.

Both DevOps and management would like nothing more than a clean, orderly universe in which systems do not fail, no data is ever lost and the system performs optimally on as little hardware as possible. Management’s business is budget and revenue; app engineers simply do not want to be woken up in the middle of the night.

Next up: Growing a DevOps organization, stay tuned!

† While not mandatory by any means, some design cycles can significantly benefit from an operational perspective; examples include static content management for websites; high availability for various system components; and any subsystem with external dependencies.

‡ A DevOps position is inherently multidisciplinary; for example, R&D background can significantly assist in troubleshooting, design reviews and in rolling your own tools. Strong system analysis skills, however, may be even more important, as they enable the two most important functions of the application engineer: spotting subtle holes in the design phase, and under-fire troubleshooting (which often requires the elusive ability to rapidly - but accurately - jump to conclusions).

When a company is acquired by another, some sort of restructuring is inevitable. As Delver’s acquisition by Sears Holdings became reality, it was also obvious that significant changes were required to how we operate. The first and most pronounced of these changes was that our social (or socially-connected, if you’re picky) search engine, the first product of its kind – we have enough ego to kick ourselves hard now that Google’s version is out – was scrapped, and the entire team was put to work on a new product for Sears Holdings. This, of course, meant restructuring the R&D team.

One of our tenets at Delver was that everything is open to interpretation, critique and improvement. As an R&D team we were always relentlessly self-improving; I believe my two years at Delver were perhaps the best I have ever experienced professionally. I’m happy to say that this approach still prevails under Sears Holdings, and we’ve taken the first few months under the new management for some serious introspection, trying to learn everything we can from the mistakes we made while still working under the Delver banner. I believe the organization has improved across the board with these sessions, resulting in significant improvements to everything from recruiting, HR and managerial processes to source control, configuration and release management.

But as a developer I felt I was hitting a professional plateau. As the new product’s specs took shape I was initially meant to take charge of the search engine implementation, continuing my original position at Delver. After nearly two years of working on search it became obvious to me that it is a very broad and nontrivial domain, and that to do a good job I will have to truly specialize in search. While I knew I did not want to continue working on the search engine, I also knew that the other developer positions would not satisfy me.
While the product was being specified I kept busy with tasks that were not directly related to the product itself: setting up an integration testing framework (not trivial with a system comprising both Java and .NET components, and which integrates a significant number of 3rd party products), defining various development processes like version and branch guidelines, and finally implementing a proper Java build system that still drives our builds today. The common ground here is that, for the most part, the greatest enjoyment was derived from doing stuff that’s “horizontal”, that crosses components and teams and sort of binds the entire development effort together. With this in mind I approached my bosses at Sears and, after prolonged discussions, we came up with the title of Application Engineer.

An application engineer, in Sears parlance at any rate, bridges the gap between R&D and IT (or rather, the support, deployment and administrative teams). Essentially, where R&D (and QA) ends, the app engineer’s role begins: the app engineer is directly responsible for the smooth operation of the production system. This means that the app engineer must not only be fully versed in the system architecture and inner workings, but must also be an active participant in defining it. Wherever there is an overlap between R&D and IT is where you will find the app engineer: front-end server farms, logging and profiling requirements, log aggregation and reporting, system monitoring (which suddenly not only includes health, but applicative counters that must be correctly specified and monitored), deployment and troubleshooting processes etc.

Having been assigned this role for the past few months I’ve reached the conclusion that an app engineer is a cross between IT-oriented system architect and system administrator, walking a fine line between a developer and a system administrator. I certainly hope I don’t fall off!

Download ant-intellij-tasks-1.0-b1.zip

A great but oft-ignored feature of Visual Studio 2005 and up is the inherent consolidation of an important developer tool: the build system. With a Visual Studio solution you can simply run MSBuild and you get accurate, automated builds. This is an invaluable capability: continuous integration is ridiculously easy to set up, as are nightly builds and automated deployment tools.

Since I started working for Delver (now Sears) I’ve been switching back and forth between C# (2.0 and later 3.0) and Java 1.6, and though the ecosystems share many similarities there are also several glaring differences. The first of these differences is that, in the Java world, it is perfectly acceptable – even traditional – to maintain a dual project structure, one using the IDE (usually Eclipse or IntelliJ IDEA) and one using one of the build tools (commonly Ant or Maven). The build scripts need to be continuously synchronized with the project structure, and output parity between the two separate build systems is almost unheard-of.

Because I had been a complete Java newbie when I started, I had never had the time to really sit down and set up a continuous integration server for our Java codebase, a mistake I did not intend to repeat when Sears took over. The first item on my agenda was to do away with the dual project structure; we originally used Eclipse, so I built a custom Ant script (my first, actually) around ant4eclipse and managed to come up with a semi-satisfactory solution. This also gave us invaluable insight when it was time to revisit our IDE choice; the lackluster project structure offered by Eclipse, along with firm positive comments on IntelliJ IDEA from several team members, tipped the balance and led us to switch to the alternative IDE, while also creating the necessity for a revamped build system that can work on top of the IntelliJ IDEA project structure. Out of necessity, a project was born.
ant-intellij-tasks is the result of several months of all-night itch-scratching on my part. While not directly affiliated with the company, we’ve been dogfooding the project at Sears for over a month now, and while there are certainly rough edges it finally seems stable enough for release! From the project website:

ant-intellij-tasks is a self-contained build system for IntelliJ IDEA projects based around Apache Ant. In essence, ant-intellij-tasks comprises three components:

- An Ant task library that can extract and resolve the IntelliJ IDEA project and module files (.ipr and .iml respectively), and provides a set of tasks and conditions around the project structure;

- A common build script which provides the four major build targets for modules: clean, build, test and package (see the quickstart guide);

- A master build script which extends these targets to the entire project.

The build system is designed to be extensible (e.g. by adding targets), customizable (e.g. by overriding a target's behavior for a specific module) and self contained in that it's a drop-in solution that should not require any significant modifications to the code base. This project is fully open source (distributed under an Apache license) and hosted at Google Code. Please report any bugs or issues on the project issue tracker. ant-intellij-tasks makes use of, and redistributes, the ant-contrib task library.

Apparently Java has quite a few known but practically undocumented issues with its handling of UNC paths under Windows. I’ve specifically encountered this bug, albeit in a slightly different scenario:

@Test

public void test() throws URISyntaxException {

final URI uri = new URI( "file://c:/temp/test/ham.and.eggs" );

new File( uri ); // IllegalArgumentException thrown here

}

Apparently the two slashes after file: introduce the authority part of the URI, so c: is parsed as an authority rather than as the start of the path; this thread on StackOverflow may give a few starting points if you want to delve deeper. It seems Java implements an older RFC for URIs which has slightly different tokenization rules.
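To see how the parser splits the two forms, here is a minimal sketch that only inspects the URI components and never touches the file system. The class and method names (FileUriAuthorityDemo, authorityOf, pathOf) are made up for this illustration; the only library calls used are URI.create, getAuthority and getPath.

```java
import java.net.URI;

final class FileUriAuthorityDemo {
    /** Returns the authority component parsed out of the URI string, or null if there is none. */
    static String authorityOf(String uri) {
        return URI.create(uri).getAuthority();
    }

    /** Returns the path component parsed out of the URI string. */
    static String pathOf(String uri) {
        return URI.create(uri).getPath();
    }

    public static void main(String[] args) {
        // Two slashes: whatever follows them, up to the next slash, becomes the authority.
        String twoSlashes = "file://c:/temp/test/ham.and.eggs";
        // One slash: no authority at all, the drive letter is part of the path.
        String oneSlash = "file:/c:/temp/test/ham.and.eggs";

        System.out.println(authorityOf(twoSlashes) + " | " + pathOf(twoSlashes));
        System.out.println(authorityOf(oneSlash) + " | " + pathOf(oneSlash));
    }
}
```

A non-null authority is exactly what makes the File constructor reject the URI, which is why the test above fails.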

At any rate, so far the only sensible solution I’ve managed to come up with is to manually remove or add (depending on your tastes…) a slash:

/**

* Resolves the specified URI, and returns the file

* represented by the URI.

*

* @param uri The URI for which to return an absolute path.

* @return The {@link File} instance represented by the

* specified URI.

* @throws IllegalArgumentException <ul><li>The URI cannot

* be null.</li><li>Wrong URI scheme for path resolution;

* only file:// URIs are supported.</li></ul>

*/

public static File getFile( URI uri )

throws IllegalArgumentException {

if ( uri == null )

throw new IllegalArgumentException(

"The URI cannot be null." );

if ( !"file".equals( uri.getScheme() ) )

throw new IllegalArgumentException( "Wrong URI " +

"scheme for path resolution, expected \"file\" " +

"and got \"" + uri.getScheme() + "\"" );

// Workaround for the following bug:

// http://bugs.sun.com/bugdatabase/view_bug.do?bug_id=5086147

// Remove extra slashes after the scheme part.

if ( uri.getAuthority() != null )

try {

uri = new URI( uri.toString().replace(

"file://", "file:/" ) );

} catch ( URISyntaxException e ) {

throw new IllegalArgumentException( "The specified " +

"URI contains an authority, but could not be " +

"normalized.", e );

}

return new File( uri );

}

This is definitely a workaround, but according to newsgroup and forum posts these bugs have been around forever. If anyone has a more elegant solution I’d love to know.

It seems nothing to do with maintaining this website is as easy or as simple as it should be. Whenever I switch hosts it’s an uphill struggle to get the site up and running again; whenever I upgrade dasBlog to a newer version I have to learn a lot about how it works, how ASP.NET works, how IIS is configured etc. It’s enough to make me seriously consider hosting my blog elsewhere and/or moving to another blogging platform, but the truth is I love dasBlog so much I simply forget how complex and volatile it can be and have to go through the same frustrating process whenever something changes.

The way I update my site is usually this:

- Ensure I have an up-to-date local mirror of the website. dasBlog keeps all of its data in XML files, so backing up the website is simply a question of wget --passive-ftp -m ftp://user:password@website.com; I have a daily scheduled task to take care of this.

- Copy the latest mirrored version to a working directory; set the directory up as an IIS website/virtual directory.

- Test the new working copy to make sure it works.

- Perform whatever modifications are required.

- Test again to make sure that the website works with multiple browsers (this time I tested with Chrome 2.0.170.0, Firefox 3.1 Beta 3 and IE 8)

- Upload the website over FTP using the FileZilla client. I always verify that the relevant configuration files and binaries are overwritten and nothing else.

This process generally allows me to test upgrades before uploading them to the “production” website, and provides an easy rollback path if something goes wrong. Thing is, something always goes wrong. In this case, although nothing’s changed in the site configuration I started getting SecurityExceptions just after the upgrade:

Request for the permission of type 'System.Security.Permissions.SecurityPermission, mscorlib, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089' failed.

This is one of the least informative error messages I have ever seen. All it tells me is that a request for some permission is denied; it doesn’t say what permission was requested, nor by whom (the stack trace seemed to indicate the permission was asserted from within System.Diagnostics.Trace, which doesn’t make much sense).

A quick web search brought me to this page, which deals specifically with installing dasBlog on a GoDaddy-hosted website. Because GoDaddy runs ASP.NET applications under a modified medium trust that allows file-system access only to the virtual directory hierarchy, the site recommends adding a virtual directory for each of dasBlog’s writable directories (content, siteconfig, logs); I tried this out and the problem was not resolved.

At this point I was getting desperate, and was willing to try just about anything to get the site up and running again. Eventually I ran a diff between the site backup and the newly modified version, and found a new openidConsumerTrace.txt file in the site root. I’ve never seen that one before; where'd it come from? A quick search showed the following section in the web.config file:

<system.diagnostics>

<assert assertuienabled="false"/>

<switches>

<add name="OpenID" value="4"/>

</switches>

<trace autoflush="true" indentsize="4">

<listeners>

<add name="fileLogger" type="System.Diagnostics.TextWriterTraceListener"

initializeData="openidConsumerTrace.txt" traceOutputOptions="None"/>

</listeners>

</trace>

</system.diagnostics>

A-ha! So the OpenID activity trace log is written to the virtual root, which is not writable (I set the ACLs to only allow writes to the above three directories). I tried changing the trace file path to ~/logs/openidConsumerTrace.txt (which is a virtual directory and has the appropriate write ACL), but this did not resolve the problem. At this point I was ready to roll back to the previous version and work on switching to another (perhaps hosted) blogging platform, and in my despair I simply commented out the whole system.diagnostics section; oddly enough, this resolved the problem…

Now I know dasBlog is free and there’s little or no point complaining, so I hope this post helps someone handle the problem. And if anyone from the dasBlog team is reading this… please be a little more careful with undocumented dependencies?

Update (8 May 2009): Eli Ofek pointed out in the comments a little-known but effective tool called the Managed Stack Explorer. Although it features a basic GUI, it can effectively be a .NET equivalent of jstack if you add it to the path; then it’s just a question of typing mse /s /p <pid>. It’s a little slower than jstack but worlds better than the alternative suggested below.

I’ve been working mostly with Java over the last year, and the .NET code I write is usually limited to interface code between our .NET-based and Java-based components. A long while away from production-grade code on Windows means I need to brush up on my production debugging skills. On today’s menu: thread dumps, or per-thread stack traces if you will. With Java code (at least starting with Java 5) this is as easy as jstack <pid>; with .NET it turns out to be quite a bit more complicated:

- Download and install the appropriate Debugging Tools for Windows version for your architecture (x86/x64/Itanium)

- If you need information about Windows function calls (e.g. you want to trace into kernel calls), download and install the appropriate symbols. This isn't strictly necessary if you just want a thread dump of your own code.

- If you need line numbers or any other detailed information, make sure to place your assemblies' PDB files where the debugger can find them (normally you just put them next to your actual assemblies).

- Start->Programs->Debugging Tools for Windows [x64]->windbg

- Attach the debugger to your running process using the menu

- Load the SOS extension with ".loadby sos mscorwks" for .NET 2.0 (".load sos" for .NET 1.0/1.1)

- Take a thread dump using "!eestack"

- Detach using ".detach"

Quite a bit of work for something as trivial as a thread dump. I hope .NET diagnostic and debugging tools improve with time (Process Explorer is definitely a step in the right direction).

Don’t ya think?

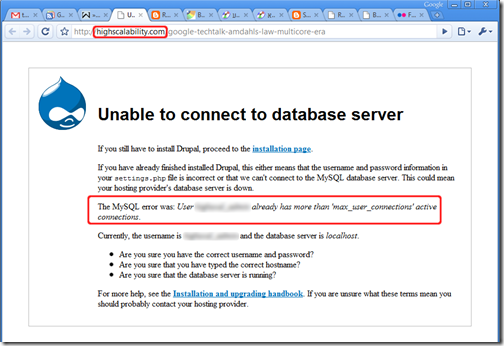

I was making modifications to one of our components, and running all of the unit tests revealed that all database-dependent integration tests were failing:

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

Last packet sent to the server was 0 ms ago.

<snip> (cut for brevity’s sake)

at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2104)

at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:729)

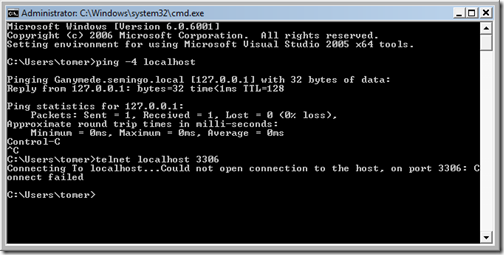

at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:46)

Strange error message, but as it turns out the inner exception was far more revealing:

java.net.ConnectException: Connection refused: connect

As can be expected, the local MySQL server was up and running, and I was able to connect with the command line tool as well as with SQLYog, so it was obviously not a problem with MySQL or the local firewall. Next up I tried to telnet to the appropriate port (the easiest way I know to check port-level connectivity) without success:

I next tried to connect to the loopback IP (127.0.0.1), and experienced a major WTF moment when the connection succeeded. I use Windows Server 2008 and, as it turns out, it supports IPv6 out of the box. localhost has a slightly different meaning under IPv6 (it maps to ::1), and as I understand it traditional IPv4 traffic is tunneled over the loopback IPv6 connection; I’m not yet familiar enough with IPv6 to draw any conclusions on why the above shouldn’t work, but the bottom line is there are several ways of resolving the problem:

- Edit your hosts file (it’s hidden under Windows Server 2008, but you should be able to Start->Run->notepad %SYSTEMROOT%\System32\Drivers\etc\hosts) and change the mapping for localhost from “::1 localhost” to “127.0.0.1 localhost”. This does resolve the problem, although I can’t say what impact this will have on IPv6-enabled applications.

- Set the TCP stack to prefer IPv4 to IPv6 when attempting to connect (it’s the reverse by default). According to this forum post, this entails setting the registry value HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\tcpip6\Parameters\DisabledComponents to the DWORD value 0x20.

- Disable IPv6 altogether for your network connection: remove the IPv6 protocol from your network connection component list. At this point in time IPv6 is still very rare so I doubt this will cause any significant issues, but YMMV.

For me, changing the hosts file was the quickest solution because it works and is easy to revert. I’ll have to keep a very careful eye on the behavior of my machine though.
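Before changing anything, it may help to check how the JVM itself resolves localhost. The following is a rough sketch only: the class and method names (LocalhostResolution, resolve, family) are invented for this example, and the output depends entirely on the machine's hosts file and IP stack configuration. If ::1 is listed first while the server only accepts IPv4 connections, that would be consistent with the refused connection described above, but that explanation is an assumption rather than something verified in this post.

```java
import java.net.Inet6Address;
import java.net.InetAddress;
import java.net.UnknownHostException;

final class LocalhostResolution {
    /** Classifies an address as "IPv6" or "IPv4" based on its runtime type. */
    static String family(InetAddress address) {
        return (address instanceof Inet6Address) ? "IPv6" : "IPv4";
    }

    /** Resolves a host name or literal address; returns an empty array if resolution fails. */
    static InetAddress[] resolve(String host) {
        try {
            return InetAddress.getAllByName(host);
        } catch (UnknownHostException e) {
            return new InetAddress[0];
        }
    }

    public static void main(String[] args) {
        // Print every address the resolver returns for localhost, in the order returned.
        for (InetAddress address : resolve("localhost")) {
            System.out.println(family(address) + ": " + address.getHostAddress());
        }
    }
}
```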

If you've ever tried to develop using PHP on IIS7 (Vista), you'll find that errors in your script result in the default IIS7 "friendly" HTTP 500 error page, which is useless for debugging. This happens in both FastCGI and ISAPI modes. To save you hours of crawling through the 'net, the solution (found on this forum post) is very simple:

- Start a command prompt;

- Copy-paste the following: %windir%\system32\inetsrv\appcmd.exe set config -Section:system.webServer/httpErrors -errorMode:Detailed

- Run iisreset

- Enjoy.

Update: I was wrong, and private setters do not appear to work (strange, I’m sure I verified this in my test code, but it didn’t work in practice). See below.

It’s been ages since I used XmlSerializer, or even wrote meaningful amounts of code in C# for that matter, which is why I was utterly stumped by this problem. Try running this code:

[XmlRoot( "test" )]

public class Test

{

private readonly string _string1;

private readonly string _string2;

public Test( string string1, string string2 )

{

_string1 = string1;

_string2 = string2;

}

[XmlAttribute( "attr" )]

public string String1 { get { return _string1; } }

[XmlElement( "element" )]

public string String2 { get { return _string2; } }

}

/// ...

XmlSerializer xs = new XmlSerializer( typeof( Test ), "" );

XmlSerializerNamespaces xsn = new XmlSerializerNamespaces();

xsn.Add("", ""); // Gets rid of redundant xmlns: attributes

StringWriter pw = new StringWriter();

xs.Serialize( pw, new Test( "1", "2" ), xsn );

Console.WriteLine( pw.GetStringBuilder().ToString() );

You’ll get an InvalidOperationException stating that “Test cannot be serialized because it does not have a parameterless constructor.” A quick look at the documentation (or a search which may lead you to this post on StackOverflow) will get you the answer: add a parameterless constructor and mark it private. Run the test code again and this time no exception will be thrown; however, you probably won’t be expecting this result:

<?xml version="1.0" encoding="utf-16"?>

<test />

The solution? Add a setter to each of your serializable properties. Don’t need a setter because the class is immutable? Tough – you’re going to need one anyway; private/internal/protected setters don’t appear to work. If you must use XmlSerializer you should throw a NotImplementedException from these setters, but in my opinion the resulting contract clutter implies you should simply avoid XmlSerializer altogether.

Although this behavior makes sense in light of how XmlSerializer can be used for both serialization and de-serialization, what threw me off was that no exception is thrown – the contract doesn’t require a setter property, and the serializer output is corrupt. Beware!

(Cross-posted on Stack Overflow)

Update (17-Sep-08): Corrected the answer to an integer arithmetic question ("rotate to the right" was obviously incorrect - I was careless when I wrote this. Thanks, Kuperstein!) and some formatting adjustments.

Preparation

I never interview the applicant on the first phone call. Doing so wouldn't give me the time to go over the candidate's CV and consider if there are points I'd like to bring up on the interview, such as specific work experience or glaringly missing skills. Additionally, setting up a specific time for the phone interview allows the candidate time to mentally prepare, drink a coffee and settle down in a quiet spot somewhere. Just puts everyone at their ease.

When first calling the candidate I always take the time to introduce the company I work for, a synopsis of what we do and a general description of how the company operates. I then proceed to inquire if the applicant sees this as a potentially interesting place to work in and whether or not they have any questions; you'd be surprised at the time this can save, for example it's not always obvious whether or not the applicant is interested in working for a start-up company, or alternatively may not find the problem domain engaging.

Getting to Know The Candidate

To start the interview off, I usually skim over the candidate's CV and select two or three interesting projects that the candidate was involved in. I ask the candidates to describe their involvement (sometimes, but not often, going into a bit of detail if the domain is familiar to me), their specific contribution to the project, whether or not they had fun and why. This usually gives me a sense of what the candidates are looking for; are they heavily into design? Are they enthusiastic about a specific technology, and if so, do they have a sound reason for it? Were they frustrated by administrative issues, did they try to improve their working environment?

Technical Questions

Once those formalities and niceties are out of the way, I turn to my ever-growing collection of interview questions and select a small subset to present to the candidate. For example, a typical interview may include the following questions:

Integer Arithmetic

Does the candidate have a decent grasp of bits and bytes? I consider this a must-have; a candidate that fails this part has no chance in hell of tackling even the most trivial native code.

- "Take an integer. How do you turn the 7th least significant bit off?" This question alone removes about half of the applicants from the equation. Some people tell you "you need to apply bitwise AND, but I can't remember the number you need to AND with" -- this isn't what you're looking for. A good answer would be something like "x &= ~(1 << 6)".

- "How would you quickly divide an integer by eight (8)?" A good answer is, you shift right by three. A better answer would be "is the integer signed or unsigned?", with a bonus for Java developers who know the difference between >> and >>>.
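For reference, both answers can be spelled out in a few lines of Java. This is a small illustrative sketch; the class and method names (BitTricks, clearBit and so on) are placeholders and not part of any interview kit.

```java
final class BitTricks {
    /** Turns off the bit at the given zero-based index; the 7th least significant bit is index 6. */
    static int clearBit(int x, int bitIndex) {
        return x & ~(1 << bitIndex);
    }

    /** Arithmetic shift: keeps the sign bit, so negative values round towards negative infinity. */
    static int divideByEightSigned(int x) {
        return x >> 3;
    }

    /** Logical shift: fills with zeros, i.e. treats the 32 bits as an unsigned quantity. */
    static int divideByEightUnsigned(int x) {
        return x >>> 3;
    }

    public static void main(String[] args) {
        System.out.println(clearBit(0xFF, 6));          // 191 (0xBF)
        System.out.println(divideByEightSigned(40));    // 5
        System.out.println(divideByEightSigned(-17));   // -3, whereas -17 / 8 is -2
        System.out.println(divideByEightUnsigned(-17)); // 536870909
    }
}
```

The -17 case is the reason "signed or unsigned?" is the better answer: the shift and the division operator disagree on negative operands, and >>> gives a completely different number.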

Pointers and Pointer Arithmetic

This really depends on what your company does. Most developers today don't need to know in practice the particulars of pointers and pointer arithmetic optimizations, but if you're developing a highly scalable system and/or one with serious performance considerations, this is a very good measure of how likely the candidate is to tackle such challenges successfully.

- "How big is a pointer?" The only correct answer is, "depends on the architecture". 32-bit operating systems will have 32-bit pointers, 64-bit systems will have 64-bit pointers. Anything that doesn't fall into these two categories is not relevant for my purposes, and likely yours as well. If the candidate fails this question I usually mark this section as "failed" and move on.

Floating Point Numbers

- "What is NaN?" A programmer who can't answer this question has never really worked with floating point numbers.

- "How are floating point numbers represented in a typical modern architecture?" Failing this question is not a deal-breaker, but answering it correctly will score the candidate a lot of points. "sign, exponent, mantissa/fraction/significand" is a sufficient answer.
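To make the "sign, exponent, fraction" answer concrete, here is a short sketch that pulls the three fields out of a 32-bit IEEE 754 float. The class and method names (FloatAnatomy and friends) are invented for the example; Float.floatToIntBits is the standard library call doing the real work.

```java
final class FloatAnatomy {
    /** The top bit: 1 for negative values, 0 otherwise. */
    static int sign(float f) {
        return Float.floatToIntBits(f) >>> 31;
    }

    /** The next 8 bits: the exponent, stored with a bias of 127. */
    static int biasedExponent(float f) {
        return (Float.floatToIntBits(f) >>> 23) & 0xFF;
    }

    /** The low 23 bits: the fraction (mantissa without its implicit leading 1). */
    static int fraction(float f) {
        return Float.floatToIntBits(f) & 0x7FFFFF;
    }

    /** NaN is the only value that is not equal to itself. */
    static boolean isNaN(float f) {
        return f != f;
    }

    public static void main(String[] args) {
        float value = -6.25f; // -1.1001 (binary) times 2^2
        System.out.println(sign(value));                            // 1
        System.out.println(biasedExponent(value) - 127);            // 2
        System.out.println(Integer.toHexString(fraction(value)));   // 480000
        System.out.println(isNaN(0.0f / 0.0f));                     // true
    }
}
```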

Essential Data Structures and Algorithms

This is the bread-and-butter of programming. A candidate should exhibit robust familiarity with commonplace data structures (hashtables, linked lists, trees etc.) and algorithms (sorting, graph traversal).

- "Describe how quicksort works. Elaborate on its performance characteristics." Any professional developer should be able to explain quicksort in a few minutes, know its average- and worst-case complexity, and recognize pathological cases (typical school-level quicksort implementations exhibit horrible performance on pre-sorted data).

- "How are hashtables commonly implemented?" A candidate should be able to describe the concept of a hashing function (uniform distribution) and how it relates to the internal data structure (normally an array of buckets).

- "What backing data structure would you choose for a simple text editor?" The classic answer is a rope, but it's unlikely that a candidate will be familiar with this data structure. A more likely response would be a "linked-list of strings", in which case you should ask about the complexity of various editing operations (deleting a line, inserting a line, deleting a character etc.) This question typically takes slightly longer to answer but I've found that it gives me a good measure of the candidate's intuition in choosing/analyzing data representations.
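The pathological quicksort case mentioned above is easy to demonstrate. The sketch below is one possible "school-level" implementation, assuming the first element is always chosen as the pivot; the class name NaiveQuicksort and the comparison counter are there purely for illustration.

```java
final class NaiveQuicksort {
    /** Number of element comparisons made by the most recent call to sort(int[]). */
    static long comparisons;

    static void sort(int[] a) {
        comparisons = 0;
        sort(a, 0, a.length - 1);
    }

    private static void sort(int[] a, int lo, int hi) {
        if (lo >= hi) {
            return; // zero or one element: already sorted
        }
        int pivot = a[lo];  // naive choice: always the first element
        int boundary = lo;  // a[lo + 1 .. boundary] holds the elements smaller than the pivot
        for (int i = lo + 1; i <= hi; i++) {
            comparisons++;
            if (a[i] < pivot) {
                boundary++;
                swap(a, boundary, i);
            }
        }
        swap(a, lo, boundary); // move the pivot into its final position
        sort(a, lo, boundary - 1);
        sort(a, boundary + 1, hi);
    }

    private static void swap(int[] a, int i, int j) {
        int tmp = a[i];
        a[i] = a[j];
        a[j] = tmp;
    }

    public static void main(String[] args) {
        int[] presorted = new int[100];
        for (int i = 0; i < presorted.length; i++) {
            presorted[i] = i;
        }
        sort(presorted);
        // Every partition peels off just one element, so the count is n(n-1)/2.
        System.out.println(comparisons); // 4950
    }
}
```

On pre-sorted input each partition step splits off only the pivot, which is where the quadratic behaviour (and a recursion depth proportional to the input size) comes from.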

Threading and Synchronization

This is fast becoming the most important subject with which to distinguish the truly brilliant candidates from the merely competent; the ubiquity of multithreaded code nowadays also means that these questions can be used to quickly weed out the unworthy candidates.

- "Describe one common way of synchronizing access to a shared resource." This is just a starter question, and if the candidate takes more than a few seconds to come up with an answer (mutex, semaphore, monitor or "synchronized" for Java developers, "lock" for C# developers) it's usually a good sign that they don't have any reasonable experience with multithreaded development.

- "You have a shared cache with a very good hit ratio. How would you synchronize access to the cache with as little performance overhead as possible?" The answer is trivially a read-write lock which can accommodate multiple readers and a single, exclusive writer. Where appropriate, ask how the candidate would implement such a lock.

- "Describe a nontrivial problem that you've had with threaded code." Responses usually fall into one of three categories: either (1) a reasonably experienced multithreaded developer would never make the same mistakes, in which case the candidate obviously isn't one; (2) a classic race-condition/deadlock/etc. scenario, which merely tells you that the candidate has some experience with multithreaded code and appears capable of tackling such challenges; or (3) rarely, a candidate may have a genuinely interesting "war story," in which case you'll probably want to hire them right away.
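For the shared-cache question, an answer in Java might look roughly like the following. It is a bare-bones sketch built on java.util.concurrent's ReentrantReadWriteLock; the ReadMostlyCache class is hypothetical and leaves out eviction, loading and everything else a real cache needs.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadMostlyCache<K, V> {
    private final Map<K, V> entries = new HashMap<K, V>();
    private final ReadWriteLock lock = new ReentrantReadWriteLock();

    /** Any number of readers may hold the read lock at the same time. */
    V get(K key) {
        lock.readLock().lock();
        try {
            return entries.get(key);
        } finally {
            lock.readLock().unlock();
        }
    }

    /** A writer waits for current readers to finish and then holds the lock exclusively. */
    void put(K key, V value) {
        lock.writeLock().lock();
        try {
            entries.put(key, value);
        } finally {
            lock.writeLock().unlock();
        }
    }

    public static void main(String[] args) {
        ReadMostlyCache<String, Integer> cache = new ReadMostlyCache<String, Integer>();
        cache.put("answer", 42);
        System.out.println(cache.get("answer"));
    }
}
```

With a very good hit ratio nearly every call takes only the read lock, so readers rarely block one another.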

Peripheral Technologies

Approach this section with caution. A candidate that's familiar with a great deal of today's hot technologies may prove completely incompetent, whereas it's quite possible to find brilliant programmers that have never touched COM in their lives. I still like to get a sense of how "in touch" the developer is with contemporary technologies; familiarity with tools and technologies can definitely be a tie-breaker between two promising candidates.

- "Are you familiar with COM? Describe an interface which any COM object must implement and its methods." Anyone who's even a bit familiar with Windows software development should be able to answer this question fully. For those unfamiliar with COM, describe it in a few words and ask the candidate to guess what the required methods of IUnknown are.

- "What is a well-formed XML file? Give two examples of errors in an XML file which would render it non-well-formed." XML is prevalent in almost every software development domain. A candidate who cannot answer these questions (and doesn't have a very good excuse) will not go past this interview.

- "What's XPath? Explain what a predicate is and how it's used." This is not a must-have, but I'd expect serious developers to at least have an idea of what XPath is. The second question is there to differentiate those who profess to know XPath from those who've actually done work with XPath and/or XSLT.

- "If you had to verify the input of an e-mail address field, how would you go about it?" There are only two valid answers to this question: "I would use a regular expression," or "I'd like to use a regular expression, but I know that fully matching e-mails according to the RFC is insanely complex, which is why I'd get a proven library to do it for me." If the candidate is being a smart-ass you can always ask them about the performance characteristics of commonplace regex engines (which have pathological cases).

- I also like to just toss buzzwords (Ruby, Boo, JSON, Struts, J2EE, WCF) around and examine the candidate's responses. It may also provide an interesting subject to ask about in a personal interview later on.

Concluding the Interview

The previous section usually takes between 10 and 15 minutes. At this point I normally ask the candidate if there are any questions they'd like answered, or anything I should know before we conclude the interview. Once that's done, I thank them for their time and tell them (even if they've failed miserably) that I will call them back in a day or two with an answer.

Hope this helps, comments are welcome.

Because the Java language lacks delegates, anonymous classes are prevalent as a syntactic replacement. Non-static nested classes are also often used in the language, a feature which is conspicuously absent from C#, albeit far less necessary with that language. This brings me to the following language caveat. This may seem a contrived example, but it's a simplification of an actual issue I've encountered in the last few days. Suppose you have a generic base class, call it BaseClass<U>, which you extend with an anonymous class. Let's also assume that the extended class spawns a thread that needs to access the BaseClass<U> state:

class BaseClass<U> {

void getState() {}

}

class Test {

public void test() {

final BaseClass<String> instance = new BaseClass<String>() {

public void invokeStatefulThread() {

// Create our runnable

final Runnable threadCode = new Runnable() {

public void run() {

/* 1 */ getState();

/* 2 */ this.getState();

/* 3 */ super.getState();

/* 4 */ BaseClass.this.getState();

/* 5 */ BaseClass<String>.this.getState();

}

};

new Thread( threadCode ).start();

}

};

instance.invokeStatefulThread();

}

}

I'll spare you the guessing game. Here's what happens with each of the five invocations of getState():

- Compiles and behaves as expected.

- Obviously won't compile; this points to the Runnable.

- Obviously won't compile; the superclass of a Runnable is Object.

- Although this is the correct raw class, it won't compile because "No enclosing instance of the type BaseClass<U> is accessible in scope", even though the raw type should still be accessible and U can be inferred.

- Although this appears to be the correct fully-qualified form, this does not compile with a "Syntax error on token(s), misplaced construct(s)" error.

The Java language specification section on "qualified this" is very brief and does not mention generics at all (does "class name" include bounded type parameters?). Oddly enough, moving the class declaration outside of the test method actually lets 4 compile -- if there's a clue there, I haven't figured it out yet.

I still haven't found a syntactically-correct way to access BaseClass<String>.this, other than placing it in a temporary variable outside of the Runnable declaration. I searched yesterday for a couple of hours with no obvious solution in sight. Ideas are more than welcome!
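For completeness, the temporary-variable workaround might look like the sketch below. It is a reworked, compilable variant of the example rather than the original code: StatefulBase, QualifiedThisWorkaround and readStateFromThread are names chosen for this illustration, and the base class is given an actual piece of state so that there is something to observe.

```java
import java.util.concurrent.atomic.AtomicReference;

class StatefulBase<U> {
    private final U state;

    StatefulBase(U state) {
        this.state = state;
    }

    U getState() {
        return state;
    }
}

final class QualifiedThisWorkaround {
    /** Reads the state of an anonymous StatefulBase subclass from a separate thread. */
    static String readStateFromThread(final String initialState) {
        final AtomicReference<String> seen = new AtomicReference<String>();
        new StatefulBase<String>(initialState) {
            void invokeStatefulThread() {
                // Capture "this" in a local before entering the Runnable; inside run(),
                // "this" is the Runnable and the anonymous subclass has no name to qualify with.
                final StatefulBase<String> self = this;
                Thread worker = new Thread(new Runnable() {
                    public void run() {
                        seen.set(self.getState());
                    }
                });
                worker.start();
                try {
                    worker.join();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException(e);
                }
            }
        }.invokeStatefulThread();
        return seen.get();
    }

    public static void main(String[] args) {
        System.out.println(readStateFromThread("hello"));
    }
}
```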

Among a lot of other tools and technologies we use Lucene here at Delver. Lucene is a very mature, high-performance and full-featured information retrieval library, which in simple terms means: it allows you to add search functionality to your applications with ease. I could spend an entire day talking about Lucene, but instead I'd like to show you how to write scripts for Lucene with Python on Windows (which is where this differs from Sujit Pal's excellent instructions on the same subject matter).

First, make sure you have your Python environment all set up -- at the time of writing this I use the Python 2.5.1 Windows installer. Next you'll need Python bindings for the library, so go and grab a copy of PyLucene; to make a long story short I suggest you use the JCC version for Windows which is downloadable here. Grab it and install it, no fussing necessary. Finally you'll need the Java VM DLL in your path, and this depends on which type of JRE you're using:

- If you're using the Java JDK, add the mental equivalent of C:\Program Files\Java\jdk1.6.0_03\jre\bin\client to your path;

- If you're using a JRE, add C:\Program Files\Java\jre1.6.0_03\bin\client to your path.

(As an aside, you should probably be using %JAVA_HOME% and %JRE_HOME% and indirecting through those.) Now you can quickly whip up scripts in Python, like this one which took about two minutes to write:

#!/usr/bin/python

#

# extract.py -- Extracts term from an index

# Tomer Gabel, Delver, 2008

#

# Usage: extract.py <field_name> <index_url>

#

import sys

import string

import lucene

from lucene import IndexReader, StandardAnalyzer, FSDirectory

def usage():

print "Usage:\n"

print sys.argv[ 0 ] + " <field_name> <index_url>"

sys.exit( -1 )

def main():

if ( len( sys.argv ) < 3 ):

usage()

lucene.initVM( lucene.CLASSPATH )

term = sys.argv[ 1 ]

index_location = sys.argv[ 2 ]

reader = IndexReader.open( FSDirectory.getDirectory( index_location, False ) )

try:

for i in range( reader.maxDoc() ):

if ( not reader.isDeleted( i ) ):

doc = reader.document( i )

print doc.get( term )

finally:

reader.close()

main()

Note the VM initialization line at the beginning -- for the JCC version of PyLucene this is a must, but even so using the library is extremely painless. Kudos to the developers responsible!

The Java implementation for generics is radically different from the C# equivalent; I won't reiterate issues that have been thoroughly discussed before, but suffice to say that Java generics are implemented as a backwards-compatible compiler extension that works on unmodified VMs. The implications of this are considerable, and I'd like to present one of them. Let's fast forward a bit and consider a relatively new language feature in Java (introduced, I believe, with J2SE 5.0): autoboxing. A thoroughly overdue language feature, autoboxing allows the seamless transition from regular value types (e.g. the ubiquitous int) to object references (Integer); before autoboxing you couldn't simply add a value to an untyped ArrayList, you had to box (wrap) it in a reference type:

ArrayList list = new ArrayList();

list.add( 3 ); // Compile-time error

list.add( new Integer( 3 ) ); // OK

Eventually Java caught up with C# (which introduced autoboxing in 2002), and with a modern compiler the above code would be valid.

With the introductions out of the way, here's a pop-quiz: what does the following code print?

HashMap<Integer, Integer> map = new HashMap<Integer, Integer>();

map.put( 4, 2 );

short n = 4;

System.out.println( Integer.toString( map.get( n ) ) );

As a long-time C# programmer I was completely befuddled when the code resulted in a NullPointerException. Huh? Exception? What? Why?

It took me a while to figure it out: because Java generics are compile-time constructs and are not directly supported by the VM, what actually happens is that the underlying container class accepts regular Object instances (reference types). Map.get is declared as get(Object key), so the compiler doesn't check the key against the map's type parameter at all, and the actual object passed to the container class (via autoboxing) is a Short! Since the container doesn't actually have a runtime generic type per se, the collection merely looks up the reference object in the map, fails to find it (a Short never equals an Integer, even when both wrap the same number) and returns null, which then blows up when it is unboxed for Integer.toString. Doh! *slaps forehead*
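The behaviour is easy to reproduce in isolation. In the sketch below the class and method names (BoxedKeyLookup, lookupWithShortKey, lookupWithIntKey) are made up for the illustration; the explicit cast to int is one straightforward way to make the lookup hit, since the key is then boxed as an Integer.

```java
import java.util.HashMap;
import java.util.Map;

final class BoxedKeyLookup {
    /** The short is autoboxed to a Short, which never equals an Integer key. */
    static Integer lookupWithShortKey(Map<Integer, Integer> map, short key) {
        return map.get(key);
    }

    /** Widening to int first means the key is autoboxed to an Integer. */
    static Integer lookupWithIntKey(Map<Integer, Integer> map, short key) {
        return map.get((int) key);
    }

    public static void main(String[] args) {
        Map<Integer, Integer> map = new HashMap<Integer, Integer>();
        map.put(4, 2);
        short n = 4;
        System.out.println(lookupWithShortKey(map, n)); // null
        System.out.println(lookupWithIntKey(map, n));   // 2
    }
}
```

Note that the two boxed values hash identically; it is the equals check that fails, because Short.equals only accepts another Short.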

A few ReSharper tips for your viewing pleasure (according to rumors it can extend your penis too!):

Alt+Shift+L locates the current file in the Solution Explorer (at least with the IDEA-style keyboard bindings we all know and love). This is particularly useful for extremely file-heavy solutions where you often want to Get Latest (or Undo Check Out) the current file. Note: you can get this behavior automatically through Tools->Options->Projects and Solutions->Track Active Item in Solution Explorer, but in my opinion it's annoying as hell.

Easier unit testing: you can bind shortcuts to run or debug unit tests by context. Context means that the behavior is exactly as expected: using the shortcut with the cursor on a test will run just that test, using it on a class will run it as a whole fixture, and using it on a directory in the Solution Explorer will run all tests that reside in that directory. This is easily accomplished by going to the Tools->Options->Keyboard menu and binding:

- ReSharper.ReSharper_UnitTest_ContextDebug to whichever shortcut you wish for debugging purposes (my favourite is Ctrl+T, Ctrl+D)

- ReSharper.ReSharper_UnitTest_ContextRun to whichever shortcut you'd like to use in order to just run tests (my favourite is Ctrl+T, Ctrl+T)

Note: You can get the same behavior with the ubiquitous TestDriven.net, but it makes no sense to me to pay twice for the same functionality.

Quick link: download

In my work at Semingo I often encounter situations where it's impossible to unit- or integration-test a component without accessing the web. This happens in one of two cases: either the component itself is web-centric and makes no sense in any other context, or I simply require an actual web server to test the components against. Since I firmly believe that tests should be self-contained and rely on external resources as little as possible, a belief which also extends to integration tests, I wrote a quick-and-dirty pluggable web server based on the .NET HttpListener class. The unit-tests for the class itself serve best to demonstrate how it's used; for instance, the built-in HttpNotFoundHandler returns 404 on all requests:

    [Test]
    [ExpectedException( typeof( WebException ) )]
    [Description( "Instantiates an HTTP server that returns 404 on all " +
                  "requests, and validates that behavior." )]
    public void VerifyThatHttpNotFoundHandlerBehavesAsExpected()
    {
        using ( LightweightWebServer webserver =
            new LightweightWebServer( LightweightWebServer.HttpNotFoundHandler ) )
        {
            WebRequest.Create( webserver.Uri ).GetResponse().Close();
        }
    }

The web server randomizes a listener port (in the range of 40000-41000, although that is easily configurable) and exposes its own URI via the LightweightWebServer.Uri property. By implementing IDisposable the scope in which the server operates is easily defined. Exceptions thrown from within the handler are forwarded to the caller when the server is disposed:

    [Test]
    [ExpectedException( typeof( AssertionException ) )]
    public void VerifyThatExceptionsAreForwardedToTestMethod()
    {
        using ( LightweightWebServer webserver = new LightweightWebServer(
            delegate { Assert.Fail( "Works!" ); } ) )
        {
            WebRequest.Create( webserver.Uri ).GetResponse().Close();
        }
    }

The handlers themselves receive an HttpListenerContext, from which both request and response objects are accessible. This makes anything from asserting on query parameters to serving content trivial:

    [Test]
    public void VerifyThatContentHandlerReturnsValidContent()
    {
        string content = "The quick brown fox jumps over the lazy dog";
        using ( LightweightWebServer webserver = new LightweightWebServer(
            delegate( HttpListenerContext context )
            {
                using ( StreamWriter sw = new StreamWriter( context.Response.OutputStream ) )
                    sw.Write( content );
            } ) )
        {
            string returned;
            using ( WebResponse resp = WebRequest.Create( webserver.Uri ).GetResponse() )
                returned = new StreamReader( resp.GetResponseStream() ).ReadToEnd();
            Assert.AreEqual( content, returned );
        }
    }

We use this class internally to mock anything from web services to proxy servers. You can grab the class sources here -- it's distributed under a Creative Commons Public Domain license, so you can basically do anything you want with it. If it's useful to anyone, I'd love to hear comments and suggestions!
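For readers on the JVM, the same idea can be sketched in Java on top of the JDK's bundled com.sun.net.httpserver package. This is only a rough analogue under my own assumptions, not a port of the class above: `TinyTestServer` and its methods are hypothetical names, it asks the OS for a free port instead of randomizing one in a range, and a handler failure is rethrown as an `AssertionError` when the server is closed.

```java
import com.sun.net.httpserver.HttpHandler;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical analogue of the C# class; all names here are illustrative.
final class TinyTestServer implements AutoCloseable {
    private final HttpServer server;
    private final AtomicReference<Throwable> failure = new AtomicReference<>();

    TinyTestServer(HttpHandler handler) {
        try {
            // Port 0 lets the OS pick any free port (assumption: simpler than a fixed range).
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        server.createContext("/", exchange -> {
            try {
                handler.handle(exchange);
            } catch (Throwable t) {
                failure.compareAndSet(null, t); // remember the first failure for close()
                try {
                    exchange.sendResponseHeaders(500, -1);
                } catch (IOException ignored) {
                    // Headers were already sent; nothing more to report to the client.
                }
            } finally {
                exchange.close();
            }
        });
        server.start();
    }

    // The address tests should send their requests to.
    URI uri() {
        return URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/");
    }

    // Stops the server and forwards any handler failure to the caller.
    @Override
    public void close() {
        server.stop(0);
        Throwable t = failure.get();
        if (t != null) {
            throw new AssertionError("handler failed: " + t, t);
        }
    }

    // Issues a GET request and returns the HTTP status code.
    static int get(URI uri) {
        try {
            HttpURLConnection c = (HttpURLConnection) uri.toURL().openConnection();
            try {
                return c.getResponseCode();
            } finally {
                c.disconnect();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // A handler that answers 404 to everything.
    static int notFoundStatus() {
        try (TinyTestServer s = new TinyTestServer(ex -> ex.sendResponseHeaders(404, -1))) {
            return get(s.uri());
        }
    }

    // A failing handler surfaces as an AssertionError when the server is closed.
    static boolean failureIsForwarded() {
        try (TinyTestServer s = new TinyTestServer(ex -> { throw new IllegalStateException("boom"); })) {
            get(s.uri());
        } catch (AssertionError expected) {
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(notFoundStatus());
        System.out.println(failureIsForwarded());
    }
}
```

Implementing AutoCloseable plays the role IDisposable plays in the C# version: a try-with-resources block defines the server's lifetime, and closing it is the natural point to surface handler failures.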

So you want a web project, a build system and a reasonable IDE to take care of the annoying details for you, right? The good news is that it's actually quite possible and there are many ways to do this. The bad news is that it's nigh impossible to get them to play along if you don't already know how. It took me days to find a solution that finally seems to work, and I'd like to share it with you. I'm probably missing a few important details or did something really, really stupid along the way (I'd appreciate comments!), but this process does seem to work. I'm not going into the essentials of Java-based web development here -- if you want a more basic explanation of the terminology, post a comment and I'll see what I can do...

1. Goal

I want to:

- Use a common, standard and powerful IDE to edit and debug my Java code, and preferably provide a usable GUI for dependency and project management;

- Use a common, standard servlet container to host my servlet and still be able to control and debug everything from the same IDE;

- Have a convenient way to handle internal-and-external dependencies without worrying too much about the details;

- Be able to quickly compile, test and package my servlet for deployment;

- Understand as little as possible about the dependency stack of the tools involved

I'm going to tell you how to achieve most of these goals, with two glaring omissions: I won't show you how to do testing (I haven't successfully managed servlet unit testing so far -- different post on that) and I can't help but delve into some of the more annoying details involved with these tools and their dependencies. Sorry about that. Additionally, some of the information here applies even if you use different tools, but you're bound to face issues not covered here; don't assume I know more than you do -- seek the answers, post them somewhere, and maybe the next person will actually find what they're looking for!

2. Tools

The tools used are:

- J2SE JDK is an obvious must-have. Version used: JDK 6 update 3;

- Eclipse (but please don't download it just yet) for code editing, debugging and project management;

- Web Tools Platform: this is the Eclipse plug-in that adds web development capabilities to the IDE, including J2EE dependency management, hosting and running servlets from within the Eclipse workspace etc. This would be a good time to run along to the WTP web-site and download the Web Tools Platform All-In-One package. I only used the release version (2.0.1 at the time of writing this), so if you use another version your mileage may vary;

- Apache Maven is the newfangled build system from Apache slated to replace ant. I've used it for the last few days and so far it appears to be quite robust and even fairly well-integrated into Eclipse (see next item). Version used: 2.0.8;

- M2eclipse is the Eclipse plug-in for Maven integration. I've only found one problem with it so far, which I'll detail later on;

- Apache Tomcat is a solid choice in servlet containers. It's robust, fast and open-source, and has terrific Eclipse integration. I haven't given any of the other containers a serious whirl yet though.

3. Preparations

Unlike Visual Studio, with the tools mentioned above there's no straightforward installation procedure. You'll have to designate at least a workspace directory (where your Eclipse projects, settings etc. go) and some location where the tools themselves go. For me, it's C:\Dev\Eclipse and C:\Tools respectively.

- Setting up Java:

  - Install the JDK and remember where it was installed (nominally in %PROGRAMFILES%\Java\jdk1.6.0_03)

  - Set up a system-wide JAVA_HOME environment variable pointing to the same directory

- Setting up Maven and Tomcat:

  - Extract both archives to your designated directory (e.g. for Maven it would be C:\Tools\apache-maven-2.0.8)

  - Add the Maven bin directory to your PATH environment variable (user- or system-wide, depending on your preference)

  - Add whichever J2EE libraries you desire from the Tomcat installation to your class-path. If you have no idea what I'm talking about, you'll probably just want to set the CLASSPATH environment variable to your equivalent of c:\tools\apache-tomcat-6.0.14\lib\servlet-api.jar;c:\tools\apache-tomcat-6.0.14\lib\jsp-api.jar

- Setting up Eclipse:

  - Extract the WTP all-in-one package (which contains Eclipse itself) to your designated directory (e.g. C:\Tools\Eclipse)

  - Load Eclipse and point it to your designated workspace location

- Install M2Eclipse:

  - Go to Help->Software Updates->Find and Install..., select "Search for new features to install" and click Next

  - Click on New Remote Site..., use M2eclipse or whatever for the name and http://m2eclipse.codehaus.org/update/ for the URL

  - Click on Finish and let Eclipse install the M2Eclipse plug-in

- Set up a web server runtime for Eclipse to host your servlets in:

  - Open Window->Preferences...

  - Under Server select Installed Runtimes and click on Add...

  - Choose (from Apache) the Apache Tomcat v6.0 runtime and click Next

  - Enter the Tomcat installation directory (e.g. C:\Tools\apache-tomcat-6.0.14) in the appropriate location and click Finish
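If you'd like to double-check the environment variables from the steps above before moving on, a tiny sanity check along these lines might help. It is only a sketch: `SetupCheck` is a made-up name, and the rules it applies (a PATH entry containing "maven", a CLASSPATH entry containing "servlet-api.jar") are my own heuristics, not anything Maven or Tomcat mandate.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.Function;
import java.util.regex.Pattern;

// Hypothetical helper; the checks are heuristics chosen for illustration.
final class SetupCheck {
    // Returns a list of human-readable problems; an empty list means the setup looks fine.
    // The environment is passed as a lookup function so it can be faked in tests.
    static List<String> problems(Function<String, String> env, String pathSeparator) {
        List<String> problems = new ArrayList<>();
        String javaHome = env.apply("JAVA_HOME");
        if (javaHome == null || javaHome.trim().isEmpty()) {
            problems.add("JAVA_HOME is not set");
        }
        if (!anyEntryContains(env.apply("PATH"), pathSeparator, "maven")) {
            problems.add("PATH does not seem to include the Maven bin directory");
        }
        if (!anyEntryContains(env.apply("CLASSPATH"), pathSeparator, "servlet-api.jar")) {
            problems.add("CLASSPATH does not include servlet-api.jar");
        }
        return problems;
    }

    // True if any separator-delimited entry contains the needle (case-insensitive).
    private static boolean anyEntryContains(String value, String separator, String needle) {
        if (value == null) {
            return false;
        }
        for (String entry : value.split(Pattern.quote(separator))) {
            if (entry.toLowerCase(Locale.ROOT).contains(needle)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Checks the real process environment using the platform's path separator.
        for (String problem : problems(System::getenv, File.pathSeparator)) {
            System.out.println(problem);
        }
    }
}
```

Run it from a fresh command prompt, so that it sees the variables you just set rather than a stale copy.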

4. Creating a new web project

First off, you must create the actual project, directory structure etc. To do this:

- Open a command prompt and go to your Eclipse workspace directory

- Decide on your Maven group and artifact IDs; it's worth noting that the artifact ID is also the directory name for the project

- Type in mvn archetype:create -DgroupId=your.group.id -DartifactId=your.artifact.id -DarchetypeArtifactId=maven-archetype-webapp

- You'll notice that a new directory was created under the workspace root

- Edit the Maven project descriptor (pom.xml) in the newly created directory:

  - Add (after the <url> tag, although I'm not sure the order matters) the following section:

        <profiles>
          <profile>
            <id>servlet</id>
            <activation>
              <activeByDefault>false</activeByDefault>
            </activation>
            <dependencies>
              <dependency>
                <groupId>javax.servlet</groupId>
                <artifactId>servlet-api</artifactId>
                <version>2.5</version>
                <scope>provided</scope>
              </dependency>
              <dependency>
                <groupId>javax.servlet.jsp</groupId>
                <artifactId>jsp-api</artifactId>
                <version>2.1</version>
                <scope>provided</scope>
              </dependency>
            </dependencies>
          </profile>
        </profiles>

  - Under <build>, add the following section:

        <plugins>
          <plugin>
            <groupId>org.mortbay.jetty</groupId>
            <artifactId>maven-jetty-plugin</artifactId>
          </plugin>
          <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <configuration>
              <source>1.5</source>
              <target>1.5</target>
            </configuration>
          </plugin>
        </plugins>

- You can now thank my colleague Aviran Mordo for finding out this bit of Voodoo.

- In the command prompt, now enter the project directory

- Type in mvn eclipse:m2eclipse -Dwtpversion=1.5 to create an Eclipse project

- Run Eclipse if it's not already started, then from the package explorer right click anywhere and click on Import...

- Choose General->Existing Projects into Workspace. For root directory pick the workspace directory

- Choose the new project and click on Finish

- At this point you may encounter a "Java compiler level does not match the version of the installed Java project facet" error. If that's the case, just right-click on the error (in the Problems view) and select Quick Fix, which will allow you to change the Java project facet version to 6.0. If this isn't what you want, you probably know enough to resolve the issue on your own...

- You'll need a src/main/java directory as a root source folder (as per the Maven convention). Right-click on the project, select New->Source Folder and type in src/main/java.

- Finally, in order to execute or debug the project on an actual running server, right-click on the new project and select Properties. From there go to the Server tab and select the runtime you created in section 3.

At this point you have a Maven web project with a corresponding Eclipse project ready for editing in your workspace. In practice you will have to do several things to get any meaningful results:

- Add your own code into the mix, such as a servlet. When adding a new servlet (via right-clicking the project, New->Other and choosing Web->Servlet) your web.xml file is automatically updated with the new servlet.

- Add your own dependencies. Maven handles dependencies quite well; for instance, in order to actually create a servlet you're going to need servlet-api.jar in your classpath; the easiest way to do this is to right-click the project, select Maven->Add Dependency and then simply type in servlet and choose javax.servlet servlet-api.

- When you wish to run or debug your servlet, right-click on its Java file and select Run As->Run on Server (or Debug As, as appropriate). Your servlet should be happily up and running.

5. Converting an existing project to Maven

I'm not sure how to go about doing this for web projects, but converting regular projects to use Maven is actually pretty straightforward: move your sources to the appropriate directories according to the Maven conventions (src/main/java for your main sources), right-click the project in Eclipse and choose Maven->Enable Dependency Management. This will implicitly create a Maven project descriptor (pom.xml) for you, and that's pretty much it. From that point on your Eclipse and Maven projects should peacefully coexist, allowing you to leverage both tools for your purposes.