This post attempts to summarize and clarify a lecture given at the Botzia (Israeli Java user group) meeting on May 3rd, 2012. You can view the presentation in its entirety on SlideShare.

What is Scala?

Scala is a modern, statically-typed language designed to run on the Java platform. It's commonly perceived as an "evolutionary Java" and with good reason: Scala adds powerful features while significantly reducing Java's verbosity. It is a highly practical language in that it offers a very straightforward migration path for developers on existing platforms: Java developers will feel right at home with Scala, and .NET developers will not have to give up many of the advantages C# has over Java.

For organizations with a preexisting codebase Scala offers an excellent balance between power and feature set, as well as perfect interoperability with existing Java code: although you certainly don't have to, you can freely use familiar libraries without a hitch, call into existing code and, for the most part, use your existing tool-chain with little or no impact.

Why did we pick Scala?

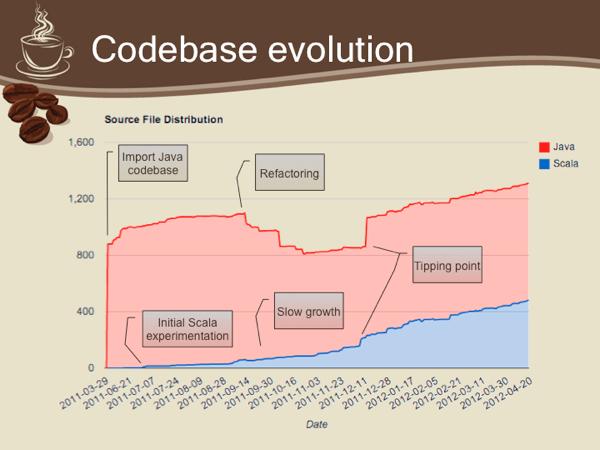

As mentioned above, Scala offers a very compelling migration path from an existing Java codebase. When we were setting up the new R&D center at newBrandAnalytics, we were already serving paying customers via a complex codebase. A complete rewrite was out of the question as we had contractual obligations, and we had to keep improving the existing product in order to maintain the competitive edge; it became very clear that we needed to maintain the existing codebase and refactor it incrementally.

An additional data point was that our core team members were highly experienced with Java development and quite comfortable with the platform; we also had a distinct preference for statically typed languages, which ruled several alternatives (particularly Clojure) right out. Scala seemed like a great compromise in that it allowed us to maintain and extend the existing codebase while enjoying advanced language features and improved veracity at little or no risk to the product itself.

How should I "sell" Scala to my boss?

"Safe" choice with proven track record: Scala is not an entirely new language, and has reached a maturity tipping point at 2.8; over the last two years the language has been gaining significant momentum, and has a number of strong advocates and success stories, including Twitter, Foursquare, LinkedIn and a whole host of others.

Scala as a better Java: You don't have to go all-in on Scala's advanced feature set, but can instead think of Scala as an improved Java with type inference, closures, an advanced collection framework, traits and pattern matching; these features alone will increase your developers' happiness by an order of magnitude, and productivity will see a corresponding improvement.
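
To make the "better Java" pitch a little more concrete, here is a minimal sketch that touches each of those features. The domain model (`Scored`, `Feedback`, `Review`, `Rating`) and the `BetterJava` object are hypothetical names invented for illustration; this is not code from our product.

```scala
// Hypothetical domain model, for illustration only.
trait Scored {
  def score: Int
  // Traits can carry implementation as well as declarations.
  def isNegative: Boolean = score < 0
}

sealed trait Feedback extends Scored
case class Review(author: String, score: Int) extends Feedback
case class Rating(score: Int) extends Feedback

object BetterJava {
  // Pattern matching destructures case classes without casts or getters.
  def describe(f: Feedback): String = f match {
    case Review(author, s) if s < 0 => "complaint from " + author
    case Review(author, _)          => "review from " + author
    case Rating(s)                  => "anonymous rating of " + s
  }

  // A closure (_.isNegative) plus the collection framework replaces an explicit loop.
  def complaints(feedback: List[Feedback]): List[String] =
    feedback.filter(_.isNegative).map(describe)

  def main(args: Array[String]): Unit = {
    // Type inference: the element type of the list is worked out by the compiler.
    val feedback = List(Review("alice", -1), Rating(4), Review("bob", 5))
    println(complaints(feedback).mkString(", "))
  }
}
```

None of this requires the more advanced corners of the language, which is the point: a team can adopt this subset first and grow into the rest later.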

Less code ⇒ less bugs: Scala code is far more concise than corresponding Java code, and helps the developer focus on the "what" instead of the "how". The resulting code is usually much shorter, clearer and simpler, which in turn helps lower bug count.
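
As a rough illustration of "what" versus "how", the sketch below computes the same per-aspect totals twice: once with an explicit loop and mutable state, and once by simply stating the transformation. The `LessCode` object and its sample data are assumptions made up for this example.

```scala
object LessCode {
  // Hypothetical (aspect, score) pairs, invented for this example.
  val scores: List[(String, Int)] =
    List(("service", -1), ("freshness", -1), ("service", 1), ("cleanliness", -1))

  // "How": an explicit loop that mutates an accumulator, Java-style.
  def totalsImperative: Map[String, Int] = {
    val totals = scala.collection.mutable.Map[String, Int]()
    for ((aspect, score) <- scores) {
      totals(aspect) = totals.getOrElse(aspect, 0) + score
    }
    totals.toMap
  }

  // "What": group the pairs by aspect, then sum the scores in each group.
  def totalsDeclarative: Map[String, Int] =
    scores.groupBy(_._1).map { case (aspect, group) => aspect -> group.map(_._2).sum }
}
```

Both methods return the same map; the second has no mutable state to get wrong, which is where much of the reduced bug count comes from.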

Helps hire better engineers: Great engineers not only love working with the latest technologies, but also love practical, incremental improvements to well-respected technologies. Merely saying "we use Scala" will help attract the sort of seasoned, reliable and professional engineers that you most want to hire.

Where does Scala put you at risk?

Learning curve: Although Scala largely derives from Java, it's a whole new language with a lot of features that take time to master. Don't expect your developers to be productive from day one; instead, provide opportunities for experimentation and encourage iterative development (and yes, give your developers the time and mandate to throw away badly written early code and redo their work if necessary). There is actually a lot of material on Scala and an active and vibrant community; specifically, your developers can easily find help (and answers) on StackOverflow.

Rough edges: Scala is fairly new, and consequently a lot of things that you take for granted with Java are not as mature or robust with Scala. IDE support is a moving target: Eclipse and IntelliJ IDEA both have actively developed Scala plugins and both have occasional issues (spurious error highlighting, lackluster performance, wonky debuggers). The common build tools support Scala, but don't play as nicely; for example, only the native build tool (sbt) supports incremental compilation. Even the compiler itself is not entirely bug-free, although compiler bugs are getting very rare indeed. Bottom line: expect occasional problems, and be patient while working around them; even better, encourage your engineers to participate in the community, file bugs and even offer patches where possible.

Production characteristics: While it runs on the JVM, Scala is not Java; there are subtle differences that you should be aware of when maintaining large-scale or highly-available software. Scala generates a great deal of synthetic code, which puts additional pressure on the PermGen space; synthetic stack frames may also exhibit significantly increased stack usage. Scala also creates a lot of intermediate objects at runtime, which results in added eden generation churn. You may want to profile your applications and tune the GC accordingly.

What's the bottom line?

Scala is fantastic. Our team at newBrandAnalytics is remarkably happier with Scala despite the occasional hitches, and I doubt any of us will consider going back to Java given the option. As long as you keep in mind that on the bleeding edge you may get cut, I definitely recommend taking the plunge, with contractors and die-hard traditional enterprises the possible exceptions.

New brand what now?

newBrandAnalytics is a newish startup (less than 2 years old) which started out with a simple premise: the internet is a goldmine of customer opinions, to the point where it's impossible to track and respond to it all with traditional techniques and purely human oversight. We mine the net for opinions, reviews, ratings and other data; we analyze this data and break it down into a structured form that can then be aggregated and measured against the customer's own operational metrics. To illustrate, one example of what we do is take the impossibly huge number of "Just ate at McDonalds in Copenhagen airport, it was horrible! Stale fries, messy bathroom and facetious service!" tweets (alright, maybe I'm giving Twitter users too much credit), turn them into what amounts to a scoreboard (cleanliness: -1, freshness: -1, service: -1), and finally crunch numbers until we can give the customer a dashboard that says, in plain English, "there's something rotten in the kingdom of Denmark." Not only that, you can easily drill down and contact the author of the complaint, pull up historical graphs to see if there's a growing negative trend in service quality for that area etc. And that's just scratching the surface.

The company itself is US-based, and is now just past the incubation stage; there's a steadily growing revenue stream, and now the business is catching up with what was essentially proof-of-concept technology, originally developed by an outsourcing firm (which actually did a surprisingly good job). The company has decided to bring engineering in-house, hired a veteran CTO and is now in the process of setting up its R&D center in Caesarea, Israel.

What's in it for you?

- Join a small, tightly-knit team of extremely smart, experienced engineers who absolutely love their profession!

- Work with cutting-edge technologies, including Node.js, Scala and Cassandra/Hadoop;

- We're all in when it comes to DevOps;

- Take your pick on tools. We don't care if you use Windows, Mac or Linux, as long as you have what you need to be productive. In fact, you get to pick your own machine spec;

- We're big believers in open-source. Team members often (some regularly) contribute to open-source projects, and they're pervasive in our technology selection;

- Work at a revenue-generating startup with huge promise;

- Competitive compensation package;

- Terrific working conditions: less traffic, noise and air pollution and much more space compared with most locations in the Gush-Dan area.

What's in it for us?

We have several positions to fill. Officially, these are: QA automation engineer, frontend engineer, senior versatile backend engineer. In practice, we're just looking for really smart people who can help us get things done faster and better. Proficiency, or talent and inclination to become proficient, in any of the following areas is a definite plus:

- Java and associated technologies (Scala experience will impress us further still!);

- DevOps, specifically: monitoring, deployment automation and build automation;

- NLP, machine learning and text analysis;

- Large-scale data crunching (experience with modern NoSQL/big-data solutions, e.g. Hadoop/Pig, GigaSpaces or Cassandra, is a major plus);

- Testing methodology: we have several major subsystems with separate testing requirements (data acquisition, NLP and UI automation, to name but a few);

- Client-side and client-facing development (MVC frameworks, JavaScript, CSS, HTML);

- HTTP crawlers and associated domains (bot idioms and challenges, scale and cost concerns).

Interested? Send in your resume to tomer@newbrandanalytics.com or contact me!

With the question of what DevOps is all about out of the way, let’s consider the challenge of setting up an effective DevOps team. I’ll try to present this as a series of questions which, taken in order, hopefully comprise a good overview of the process. I certainly can’t cover everything in a single post, so let’s start with the basics:

Do I even need a DevOps team?

Not necessarily. What you do most certainly need, and probably already have, is an application engineer role. The formal makeup of such a team derives from business necessity: one-man ISVs handle all aspects of the application on their own, including the operational aspects, and are therefore not interesting for the purpose of this discussion. But even with garage startups that comprise very few people (as few as three, in practice), someone always ends up taking the DevOps mantle; there’s always the one guy who ends up taking care of the hosting, deployment and all that other business because someone has to. As your organization grows, as your engineering throughput exceeds your operational capacity and the complexity of your product portfolio increases dramatically, that’s when you need to consider an actual team.

How do I split up the work?

There is no absolute truth here; the answer depends on the size, organizational structure and corporate culture of your specific organization. Your requirements may be satisfied by having just one übergeek take care of everything; in other cases (such as multiple long-term projects, very high system complexity or simply too much work for one person) you may need to divide the work up into more specific agendas. There are numerous strategies you can employ, but here are a few ideas to get you started:

1. Division by domain

This is a fairly trivial model: figure out what work gets done, categorize it into a reasonably small number of domains, and assign them to specific team members according to their respective skillsets, interests and aspirations.
To illustrate, consider the following domains: capacity planning, monitoring, high availability, infrastructure (networking, storage) and deployment. Your engineers probably already handle all of the above. While this model has the benefit of generally placing the most qualified personnel in charge of any particular domain, be careful to have at least a pair of engineers on each domain so you can scale up when necessary, and to ensure knowledge is retained. In the case of small teams (3-5 people) this means each person is likely to have more than a single area of responsibility; depending on your team members, the ensuing overhead (or even personality clash) may have a significant impact on the efficiency of your team.

2. Division by feature

Another fairly simple strategy is to have shared ownership of all the fundamental tools and processes your team employs, and scale by assigning specific features to team members. Not everyone on the team has to have the same level of expertise on every domain, but every team member does need to have a good grasp of all aspects of your work. The level of expertise of any specific team member becomes an evaluation criterion when assigning a new feature, project or issue, and has to be weighed alongside other criteria, such as the need to challenge less-experienced team members, pressing schedules and the other myriad details that managers have to handle. Ideally, any feature can be assigned to any team member and executed effectively.

The primary drawback to this model is efficiency: it is irresponsible to expect the same level of productivity for a specific task from each team member, which makes efficiency the direct responsibility of whoever divides up the assignments, usually the team lead or group manager†.
Unfortunately, not all managers are adept at identifying bottlenecks and shifting responsibility around to optimize for efficiency; whether this model suits your organization or not depends on your ability to effectively track your team members’ abilities and provide effective guidance.

A possibly bigger challenge here is knowledge sharing. As the team grows, so does the communication overhead and, perhaps more importantly, the opportunity for miscommunication, necessitating meticulous documentation and proactive knowledge management. The ensuing overhead can kill productivity if not carefully managed.

† Small-enough teams may be able to split the work up by consensus, but success depends on the social composition of the team and is hard to predict.

3. Divide and conquer

The problem can also be attacked from a completely different angle. Your team comprises members with different skills, mindsets and even ambitions; sometimes these do not mesh together naturally, possibly due to too much overlap or, in other cases, too little. This can happen for any number of reasons, but let’s assume that this is not a case of simple ego clash or, as Rands put it, a toxic asset; your team members are hard workers, they like each other well enough and want to get things done, but they just can’t agree on the mechanics. It’s time to divide and conquer.

There are two ways to go about doing this: the simplest is to split up domain ownership between the groups. It’s not likely that anyone will be truly satisfied with your choices (if the division of expertise or interest were natural, there probably wouldn’t be any significant friction in the first place), but the grumbles will eventually subside, if only through relief that the constant bickering is over. When the division of responsibilities is clear, people feel there’s less at stake and are more likely to concede a draw in an argument (don’t expect capitulation here, these are engineers after all…).
Once the emotional involvement is out of the equation, engineers are quite rational; if they can’t make a convincing argument and they’re not personally vested in the result, the argument ends with an amicable “yeah, alright, it’s up to you.” Unfortunately, turning this to your advantage in the long-term entails a clever assignment of domains, balanced well enough so that both sides rate their level of interest above the “meh” threshold. How to do that is entirely up to you.

The second way is to introduce a new element into the mix. In particular, if the areas of contention typically revolve around choice of tools, it might be wise to assign ownership of tool selection to a third party; for instance, friction may ensue over the choice of monitoring system. You may experience heated arguments about the relative merits of e.g. Nagios and Zabbix, whereas a level-headed analysis would show that a customized system based on Graphite is your best strategy. Or perhaps the debate is on httpd vs nginx; or any strategic technical decision, really. See what I’m getting at here?

At a previous employer we ended up defining an Infrastructure Architect role, an operations counterpart to the System Architect role in R&D. These roles fill very much the same need, except on opposite ends of the spectrum; whereas the SA might pick Hadoop and log4j for data storage and logging respectively, the IA will likely pick Graphite and LogStash to monitor the cluster and aggregate logs, respectively. Interestingly, the necessary skillset is also quite similar. Identifying and mentoring likely candidates is a subject that I’ll likely tackle in a future installment.

To be continued

There’s much more to say; this is an entirely new branch of the industry, with few acknowledged best practices and even fewer well-known truths. The main point here is that you have to experiment in order to build an effective team, and manage it efficiently.
I’d love to hear actual feedback on what works and what doesn’t, and likewise I’d be interested in feedback regarding what I should focus on next: which is more interesting, recruiting techniques and pains, or building an effective interface with the other groups in the organization? Or perhaps an entirely different domain, such as knowledge management techniques? Fire away…

Sometimes you know it’s time to move on. I’ve been working at Sears (née Delver) for just under four years, making this the longest stretch in my career. Even so, my tenure at Delver has seen several major upheavals, including a name change (we originally started off as Semingo), product strategy shifts (Delver started out as a people search engine, similar to what pipl.com are doing today), launching our product, failing to survive the market crash and shutting down, being bought outright by Sears and a drastic personal shift from R&D to operations. So yeah, a lot has happened in the last four years: I’ve worked alongside some amazingly smart people, helped build and take care of systems way more complex than any I had encountered before, and perhaps even learned to curb my temper a little bit. Delver/Sears is a great company to work for, but it’s time for me to move on.

As of two weeks ago I’m a software architect working for newBrandAnalytics, a startup company that provides social business intelligence for various industries; I’ll skip the business spiel as you can go ahead and read about our solutions and existing customers. Suffice it to say that we handle massive amounts of data from various sources (including social media such as Twitter and Facebook); scaling concerns aside, this also entails bleeding-edge text analysis and NLP, rapid response to growing (and changing) customer demand and other wonderful aspects of complex, scalable software systems built to support an actual business. Exciting times are ahead!

Coincidentally, we’re hiring! Our careers page isn’t up yet, but we’re looking for top notch engineers, QA engineers and NLP/algorithm specialists for our Israeli R&D center. I’ll update this post with more concrete details later on, but will leave you with the following points to consider in the meantime:

- nBA is a fresh, privately-funded company with a fast-growing customer base;

- We’re a core group of extremely smart, experienced people who love (and know how!) to get things done;

- Tackle challenges such as massive data volume, increasing scale and deceptively simple business requirements which translate to bleeding-edge software;

- The Israeli R&D center is the only in-house engineering center for nBA. Employees have unprecedented influence on the company, as well as direct communication with customers. There’s nothing like actual business feedback to motivate and ensure success!

If any of this resonates with you, go ahead and send your CV to jobs@newbrandanalytics.com, or contact me directly at tomer@tomergabel.com.

(Cross-posted on the Delver Blog)

A little while ago I posted a job opening for the application engineer position at Delver, and one of the replies caught my interest: “so it’s a DevOps position?” A Google search later and I was astounded to find that what I tried to explain has since grown into a fully fledged industry trend.

I’ve learned to be mistrustful of such trends; in my experience they tend to inflate and deflate regularly, and if you try to keep abreast of all the proposed improvements to the development process you’re going to drown in overhead. Still, a critical percentage of these trends have a valid rationale driving them: unit testing, concurrency constructs, event-driven application servers, RESTful interfaces – all of these have very solid theoretical and/or practical reasoning and have had significant impact on the software development field. An additional commonality is that each took several years to gain acceptance in leading R&D teams, and several more to become ingrained methodology.

The key word here is risk management, which is typically avoided or ignored altogether by the common developer. Don’t get me wrong: I come from a purely R&D background, and have shared that trait for years. What started me on a different line of thinking was the distinct pleasure of being woken up, once too often, by some poor NOC operator in the middle of the night, and getting mad enough to do something about it. Like most R&D personnel I was largely oblivious to the pains of deployment, availability, scaling, production troubleshooting and customer support, and had to learn my lessons the hard way. I believe most R&D people aren’t more mindful of the pains inherent in each of these domains for the simplest of reasons: they’ve never been challenged to be.

This is where “DevOps” comes in. An application engineer (app engineer or “devops guy” if you will) has two primary objectives:

- Guide the R&D team in risk assessment.
Having a software-savvy operations team member participating in design reviews is a huge boon to risk management; a better app engineer will want to participate in the design process itself, not necessarily designing the actual feature†, but even a quick overview of the proposed design is usually enough to provide operational feedback. This, without fail, results in a better design: clearer error-handling semantics, better monitoring and configuration facilities, high availability baked into the design, and induction into the deployment/administration toolchain concurrently with development efforts. This in turn leads to much better overall estimates and reduced failure rate.

- Keep the system up and running! This entails more than just observing the monitoring system (in my opinion, the less time you spend that way the less you are likely to have to, ad vitam aut culpam). The application engineer is in the relatively unique position of being both the consumer and producer of his or her own tools; this is where the wheat is separated from the chaff: a great app engineer will forever strive to improve and automate every nonfunctional aspect of the system, diligently working towards that asymptotic 100% uptime‡. DevOps personnel are the go-to people for getting systems off the ground; they’ll sketch the solution out, provide short- and long-term plans for deployment, monitoring and administration solutions both system-wide and component-specific. They’ll devise automatic tools to identify problems and anomalies, they’ll work ever-more-specific endpoints into their monitoring system, and they’ll be happy doing it because contrary to nearly any other position in the industry their interests and the business’s inherently converge.

Both DevOps and management would like nothing more than a clean, orderly universe in which systems do not fail, no data is ever lost and the system performs optimally on as little hardware as possible. Management’s business is budget and revenue; app engineers simply do not want to be woken up in the middle of the night.

Next up: Growing a DevOps organization, stay tuned!

† While not mandatory by any means, some design cycles can significantly benefit from an operational perspective; examples include static content management for websites; high availability for various system components; and any subsystem with external dependencies.

‡ A DevOps position is inherently multidisciplinary; for example, R&D background can significantly assist in troubleshooting, design reviews and in rolling your own tools. Strong system analysis skills, however, may be even more important, as they enable the two most important functions of the application engineer: spotting subtle holes in the design phase, and under-fire troubleshooting (which often requires the elusive ability to rapidly - but accurately - jump to conclusions).

When a company is acquired by another, some sort of restructuring is inevitable. As Delver’s acquisition by Sears Holdings became reality, it was also obvious that significant changes were required to how we operate. The first and most pronounced of these changes was that our social (or socially-connected, if you’re picky) search engine, the first product of its kind – we have enough ego to kick ourselves hard now that Google’s version is out – was scrapped, and the entire team was put to work on a new product for Sears Holdings. This, of course, meant restructuring the R&D team.

One of our tenets at Delver was that everything is open to interpretation, critique and improvement. As an R&D team we were always relentlessly self-improving; I believe my two years at Delver were perhaps the best I have ever experienced professionally. I’m happy to say that this approach still prevails under Sears Holdings, and we’ve taken the first few months under the new management for some serious introspection, trying to learn everything we can from the mistakes we made while still working under the Delver banner. I believe the organization has improved across the board with these sessions, resulting in significant improvements to everything from recruiting, HR and managerial processes to source control, configuration and release management.

But as a developer I felt I was hitting a professional plateau. As the new product’s specs took shape I was initially meant to take charge of the search engine implementation, continuing my original position at Delver. After nearly two years of working on search it became obvious to me that it is a very broad and nontrivial domain, and that to do a good job I would have to truly specialize in search. While I knew I did not want to continue working on the search engine, I also knew that the other developer positions would not satisfy me.
While the product was being specified I kept busy with tasks that were not directly related to the product itself: setting up an integration testing framework (not trivial with a system comprising both Java and .NET components, and which integrates a significant number of 3rd party products), defining various development processes like version and branch guidelines, and finally implementing a proper Java build system that still drives our builds today. The common ground here is that, for the most part, the greatest enjoyment was derived from doing stuff that’s “horizontal”, that crosses components and teams and sort of binds the entire development effort together.

With this in mind I approached my bosses at Sears and, after prolonged discussions, we came up with the title of Application Engineer:

An application engineer, in Sears parlance at any rate, bridges the gap between R&D and IT (or rather, the support, deployment and administrative teams). Essentially, where R&D (and QA) ends, the app engineer’s role begins: the app engineer is directly responsible for the smooth operation of the production system. This means that the app engineer must not only be fully versed in the system architecture and inner workings, but must also be an active participant in defining it. Wherever there is an overlap between R&D and IT is where you will find the app engineer: front-end server farms, logging and profiling requirements, log aggregation and reporting, system monitoring (which suddenly includes not only health, but also applicative counters that must be correctly specified and monitored), deployment and troubleshooting processes etc.

Having been assigned this role for the past few months I’ve reached the conclusion that an app engineer is a cross between IT-oriented system architect and system administrator, walking a fine line between a developer and a system administrator. I certainly hope I don’t fall off!

Download ant-intellij-tasks-1.0-b1.zip

A great but oft-ignored feature of Visual Studio 2005 and up is the inherent consolidation of an important developer tool: the build system. With a Visual Studio solution you can simply run MSBuild and you get accurate, automated builds. This is an invaluable capability: continuous integration is ridiculously easy to set up, as are nightly builds and automated deployment tools.

Since I started working for Delver (now Sears) I’ve been switching back and forth between C# (2.0 and later 3.0) and Java 1.6, and though the ecosystems share many similarities there are also several glaring differences. The first of these differences is that, in the Java world, it is perfectly acceptable – even traditional – to maintain a dual project structure, one using the IDE (usually Eclipse or IntelliJ IDEA) and one using one of the build tools (commonly Ant or Maven). The build scripts need to be continuously synchronized with the project structure, and output parity between the two separate build systems is almost unheard-of.

Because I had been a complete Java newbie when I started, I had never had the time to really sit down and set up a continuous integration server for our Java codebase, a mistake I did not intend to repeat when Sears took over. The first item on my agenda was to do away with the dual project structure; we originally used Eclipse, so I built a custom Ant script (my first, actually) around ant4eclipse and managed to come up with a semi-satisfactory solution. This also gave us invaluable insight when it was time to revisit our IDE choice; the lackluster project structure offered by Eclipse, along with firm positive comments on IntelliJ IDEA from several team members, tipped the balance and led us to switch to the alternative IDE, while also creating the necessity for a revamped build system that can work on top of the IntelliJ IDEA project structure. Out of necessity, a project was born.
ant-intellij-tasks is the result of several months of all-night itch-scratching on my part. While not directly affiliated with the company, we’ve been dogfooding the project at Sears for over a month now, and while there are certainly rough edges it finally seems stable enough for release! From the project website:

ant-intellij-tasks is a self-contained build system for IntelliJ IDEA projects based around Apache Ant. In essence, ant-intellij-tasks comprises three components:

- An Ant task library that can extract and resolve the IntelliJ IDEA project and module files (.ipr and .iml respectively), and provides a set of tasks and conditions around the project structure;

- A common build script which provides the four major build targets for modules: clean, build, test and package (see the quickstart guide);

- A master build script which extends these targets to the entire project.

The build system is designed to be extensible (e.g. by adding targets), customizable (e.g. by overriding a target's behavior for a specific module) and self contained in that it's a drop-in solution that should not require any significant modifications to the code base. This project is fully open source (distributed under an Apache license) and hosted at Google Code. Please report any bugs or issues on the project issue tracker. ant-intellij-tasks makes use of, and redistributes, the ant-contrib task library.

In the previous installment I first experimented with rolling the tubes in my headphone amp, and first introduced an external DAC into one of my audio setups. Replacing the Electro Harmonix 6922EH tubes with a pair of matched JAN-Sylvania 7308 tubes bought me a significant improvement in soundstage and resolution, and adding a Musical Fidelity V-DAC into the mix resulted in even better resolution at the expense of reduced imaging:

“… the V-DAC features significantly improved accuracy and resolution, and more and more often I’ve been rewinding tracks just to make sure that, yes, I wasn’t imagining, I really have never heard this or that detail before… In fact, the only disadvantage is in a certain change in the soundstage, as though the stereo separation grew just a little wider than I’d like. Don’t get me wrong, the soundstage is huge and imaging is terrific, but it sometimes seems to me that sounds tend to cluster a little closer to the extremes of the soundstage than they should.”

When that upgrade was concluded I was left with an unfulfilled sense of curiosity. The first tube upgrade was a huge success, and the new DAC added the detail I was missing with the original setup, but I was not entirely happy with the difference in soundstage. Along with the 7308 tubes I bought a pair of Mullard E88CC; from what I’ve read on the tube I predicted that it would improve the soundstage, a theory which I immediately put to the test.

Great success! The 7308 tubes were such a huge step up, I did not expect to be so well-rewarded the second time around. The soundstage not only “deflated” to more natural-sounding positioning, but it also deepened (i.e. became more three-dimensional). Instrument articulation has improved dramatically: buzzing of metallic strings can be heard distinctly on decent recordings, bass has deepened remarkably and the sense of air around instruments can be absolutely mind-boggling. Even the noise floor dropped a few decibels. The improvement was so pronounced I now have a renewed desire to test additional tube amps, such as the Little Dot Mk IV SE or DarkVoice 336 SE. I’ll certainly post my experiences if I manage to get my hands on one of these…

Finally content with my primary setup (which I primarily use at work), I have turned my attention to the secondary setup at home. At that point the setup consisted of an onboard ALC889A codec, connected to an Aqua Mini-Head via a generic analog interconnect, this in turn connected to ‘03 Beyerdynamic DT880 cans. In my previous post I had failed to mention that I did test the ‘05 edition DT880s with the 7308 tubes and found the new edition to be a downgrade: slightly better midbass marred by a muddy soundstage, flat treble (without the sense of “air” I’ve begun to associate with tube-based amplification) and a generally degraded experience compared with the older edition. At that point I had almost put my original setup up for sale in its entirety, but decided to hold on to it for a few more experiments before I let it go. I took the opportunity to test those cans with the upgraded Mullard tubes, but am sad to report that no new synergy is to be found in that direction, and throwing the V-DAC into the mix did not result in any improvement either.

As it happens, an opportunity presented itself to buy an upgraded Zhaolu D2.5 DAC for a very good price, and after arranging to borrow it for a few days I’ve had the chance to try out some interesting new combinations. The Zhaolu (apparently pronounced chow-loo) D2.5 is a modular DAC that, at a cost of $215, is widely considered one of the finest and most customizable value-priced DACs on the market. There are a lot of aftermarket upgrades available for the device, and it’s offered with a headphone amplifier module for an extra $55. The device is based on a CS4398 chip, and my particular unit comes with upgraded National LM4562 opamps. The unit is extremely large (24cm x 30cm x 5.5cm – about the same surface area as the G&W amp!) and surprisingly heavy. Build quality is fairly mediocre: the markings on the front tend to easily wear off and the volume control produces an audible distortion when adjusted. From a usability perspective the unit is decent but does have a couple of minor annoyances, specifically the need to select the optical channel every time I turn it on and an annoying blinking “mute” indicator when there is no active signal from the computer.

The D2.5 has only S/PDIF and TOSLINK inputs, and I did not experiment with the V-DAC’s optical input to draw a comparison, so this is not a direct apples-to-apples comparison; that said, I connected the D2.5 to my desktop via TOSLINK, connected it via the preamp output to the G&W amp and started with the ‘03 edition Beyerdynamic DT880 headphones. The initial impression was severely disappointing: while the resolution was incredible, the sound had lost all warmth and the soundstage had lost all depth. Just to put things in perspective: this sounded significantly worse than with a straight analogue connection from my computer at work. I figured that since the integrated headphone amp in the D2.5 was designed along with the DAC the combination would probably work better; unfortunately, this resulted in an even flatter soundstage, and imaging suffered as well.

At this point I had almost given up on the DAC, but decided to switch back to the G&W amp and try out the ‘05 edition DT880s. This resulted in a markedly improved sound in comparison with the V-DAC and analogue connection; the sound gained some warmth, the soundstage expanded significantly and imaging improved as well. This is still a far cry from the ‘03 edition DT880 and V-DAC combination, but I could see how the Zhaolu DAC would benefit the cans if it was paired with the right amp.

While a definite improvement over the previous anemic combination, the results were still not satisfying. I briefly tried running the D2.5 and V-DAC through the Aqua amp but was not overly impressed; either the Mini-Head is not a good match for the DT880s (it was supposedly designed around the Sennheiser HD600) or it simply isn’t a very good amp. As an aside, in both cases the ‘03 edition sounded better to my ears than the ‘05. Having tried nearly every combination of equipment at my disposal I nearly gave up at this point, but for the sake of completeness decided to try out the D2.5 and its integrated headphone amp with the ‘05 DT880s. To my surprise, this combination is a winner: amazingly revealing, detailed sound combined with robust imaging and a wide (albeit not as deep as I’d like) soundstage. While not as musical as my primary system (G&W amp, Mullard tubes, V-DAC), the Zhaolu D2.5 provides better resolution and an experience that’s nearly as engaging as that combination for less than a fourth of the cost. Value for the money indeed! If you can find one of these units, I definitely suggest giving it a try; just make sure to test it first as it’s apparently quite finicky with regards to its partners. For my part, the Zhaolu D2.5 has permanently replaced the Aqua Mini-Head amp in my home setup; this, in turn, went to my brother who is quite pleased with it driving ‘03 DT880s via a Creative X-Fi sound card. Visit my Flickr account for more photos

Apparently Java has quite a few known but practically undocumented issues with its handling of UNC paths under Windows. I’ve specifically encountered this bug, albeit in a slightly different scenario:

    @Test
    public void test() throws URISyntaxException {
        final URI uri = new URI( "file://c:/temp/test/ham.and.eggs" );
        new File( uri );    // IllegalArgumentException thrown here
    }

Apparently whatever follows the two slashes after file: (here, the drive letter) is interpreted as the authority part of the URI; this thread on StackOverflow may give a few starting points if you want to delve deeper. It seems Java implements an older RFC for URIs which has slightly different tokenization rules.

At any rate, so far the only sensible solution I’ve managed to come up with is to manually remove or add (depending on your tastes…) a slash:

    /**
     * Resolves the specified URI, and returns the file
     * represented by the URI.
     *
     * @param uri The URI for which to return an absolute path.
     * @return The {@link File} instance represented by the
     *         specified URI.
     * @throws IllegalArgumentException <ul><li>The URI cannot
     *         be null.</li><li>Wrong URI scheme for path resolution;
     *         only file:// URIs are supported.</li></ul>
     */
    public static File getFile( URI uri )
            throws IllegalArgumentException {
        if ( uri == null )
            throw new IllegalArgumentException(
                    "The URI cannot be null." );
        if ( !"file".equals( uri.getScheme() ) )
            throw new IllegalArgumentException( "Wrong URI " +
                    "scheme for path resolution, expected \"file\" " +
                    "and got \"" + uri.getScheme() + "\"" );

        // Workaround for the following bug:
        // http://bugs.sun.com/bugdatabase/view_bug.do?bug_id=5086147
        // Remove extra slashes after the scheme part.
        if ( uri.getAuthority() != null )
            try {
                uri = new URI( uri.toString().replace(
                        "file://", "file:/" ) );
            } catch ( URISyntaxException e ) {
                throw new IllegalArgumentException( "The specified " +
                        "URI contains an authority, but could not be " +
                        "normalized.", e );
            }

        return new File( uri );
    }

This is definitely a workaround, but according to newsgroup and forum posts these bugs have been around forever. If anyone has a more elegant solution I’d love to know.
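To see why the extra slash matters, it can help to look at how java.net.URI splits the different spellings of the same path. The following is only a small illustrative sketch: the class name FileUriAuthorityDemo and its two helper methods are made up for this example and are not part of the workaround above. It prints the authority and path components for the double-slash form from the failing test, and for the single-slash and triple-slash forms, neither of which should carry an authority.

```java
import java.net.URI;

// Hypothetical demo class (not from the original post): shows which
// spellings of a file URI end up with an authority component.
final class FileUriAuthorityDemo {

    /** Returns the authority component of the URI text, or null if it has none. */
    static String authorityOf( String text ) {
        return URI.create( text ).getAuthority();
    }

    /** Returns the decoded path component of the URI text. */
    static String pathOf( String text ) {
        return URI.create( text ).getPath();
    }

    public static void main( String[] args ) {
        final String[] samples = {
            "file://c:/temp/test/ham.and.eggs",   // drive letter lands in the authority
            "file:/c:/temp/test/ham.and.eggs",    // no authority at all
            "file:///c:/temp/test/ham.and.eggs"   // empty authority
        };
        for ( String text : samples )
            System.out.println( text
                    + " -> authority=" + authorityOf( text )
                    + ", path=" + pathOf( text ) );
    }
}
```

Only the first form reports a non-null authority, which is exactly the condition that getFile checks before rewriting the URI.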

Note: This is more or less a translation of two forum posts on an Israeli home theater website; if you can read Hebrew, you may be interested in the comments as well. First post, second post

Lately I have been bitten by the audio bug again, and have decided to experiment with my headphone setup. This is the setup I use at work and listen to for hours at a time (often 5 or more hours a day), and which consists of a pair of 2003 model Beyerdynamic DT880 cans I bought on a previous trip to China, hooked up to a G&W T-2.6F amp I bought on the same trip after having spent an hour listening to various equipment combinations. Unlike products from other, internationally recognized brands with which I am familiar (Creek, Musical Fidelity etc.) this product was completely out of my comfort zone: a Chinese-made amplifier, which like many others I normally associate with cheap components and subpar build quality. Additionally it is rather large and bulky, and to top it off, it is a hybrid design based on two Electro Harmonix 6922EH preamplifier tubes and a solid state power section. Up until that point I had heard solid state equipment exclusively, with the exception of two loudspeaker demonstrations in which the amplification included tube components and was significantly more expensive than I could even consider. Since I had not known what to expect I could not detect the subtleties of tube sound, and had chosen this headphone/amp combination strictly on being the best I had heard during that visit.

This setup was, in turn, hooked via a generic interconnect to my work computer with onboard HD audio, through which I play mostly lossless rips from my own CDs via Exact Audio Copy. It had faithfully served me for over three years, at which point I decided to do some research and was first exposed to the vast world of tube-based amplification and tube rolling. After several days of forum lurking and reading well into the night I placed my first ever tube order at thetubestore.com. With the help of the shop representative, Jon, and general recommendations around the web, I selected a matched pair of JAN-Sylvania 7308 tubes and yet another matched pair of Mullard E88CC tubes. I did not have to wait very long, as the UPS delivery arrived amazingly fast (a single weekend, not too shabby for an international delivery!), and decided to spend a few weeks with each pair to be able to form an honest, educated opinion. I began my experiments with what is, according to general consensus, the weaker tube: the JAN-Sylvania 7308.

What a shock! While I do not, for a moment, assert that similar or better sound cannot be found in solid state amplifiers, I certainly did not expect such a dramatic difference in sound quality. The soundstage, previously wide but shallow, simply exploded! It’s as though the sound instantly multiplied its volume tenfold or more; highs became wonderfully airy and distinct, and the resolution… let me put it this way: in every audio enthusiast’s life there are but few such moments of enlightenment, where you suddenly realize how much more is possible, and even attainable. The first time I listened to the very same equipment with upgraded tubes provided me with one of those rare occasions, and from that point on I can never settle for less.

At the same time I had a second such revelation, albeit by accident: because of the physical layout of my desktop at work I was forced to place the amp further away from the computer, which necessitated a longer interconnect cable. I did not have one at hand and until I was done for the day I was left with no alternative but to use the iPod’s standard analog output. The iPod is generally considered to have very poor analog performance, which is why I was thoroughly surprised when, having brought a longer (and higher quality) interconnect from home and hooked the computer up, I found that the iPod actually sounded better. I recall when it was almost impossible to find a decent quality audio card for your computer, and assumed that contemporary solutions were at least adequate; indeed, the computer sound output was cleaner (better SNR) but also had significantly diminished dynamic range and volume. This led me to the conclusion that an audio card upgrade was in order.

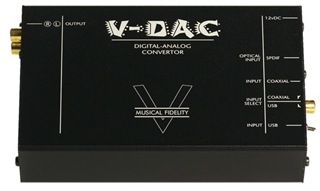

A little research into the subject brought me to the conclusion that what I’m interested in is not, in fact, a computer audio card; what I want is an external DAC, or more specifically a USB DAC. Getting a computer to output even half-decent analog audio is pretty much a futile quest, and while hooking it up via coaxial/optical S/PDIF would certainly work there are some significant disadvantages, namely: digital (lossy) volume control, and jitter. With S/PDIF, both clock and data signals are encoded together on a single data line, and the clock has to be regenerated. This introduces subtle timing inaccuracies, generally known as jitter, which in turn have an undesirable impact on digital-to-analog conversion (a more scientific explanation can be found here). Just how significant an impact is a subject of much controversy, but a USB DAC has the theoretical advantage of significantly reduced jitter on the protocol level, as well as removing the question of the onboard S/PDIF encoder’s clock accuracy from the equation.

With the advice of fellow forum members I resolved to try one of the following DAC trio: Cambridge Audio DacMagic, Oritek OMZ DAC or Musical Fidelity V-DAC. Following a lead from a fellow forum member I eventually bought the V-DAC for a very good price from a head-fi.org forum member. The V-DAC is a 192kHz/24-bit upsampling DAC with optical, coaxial and USB inputs that has received high praise in the head-fi circles and is even available in Israel for a surprisingly reasonable price.

I hooked the unit up with an unnamed but high quality silver interconnect, and after significant critical listening I can draw the following conclusions: compared with both iPod and onboard audio card (as well as an old Audigy 4 I had lying around) the V-DAC features significantly improved accuracy and resolution, and more and more often I’ve been rewinding tracks just to make sure that, yes, I wasn’t imagining, I really have never heard this or that detail before. This is exactly what I got into audio for in the first place! The bass is also much tighter, and in my opinion also extends further down than it ever did. In fact, the only disadvantage is in a certain change in the soundstage, as though the stereo separation grew just a little wider than I’d like. Don’t get me wrong, the soundstage is huge and imaging is terrific, but it sometimes seems to me that sounds tend to cluster a little closer to the extremes of the soundstage than they should.

All in all I’m extremely happy with the upgrade, and luckily I still have some new equipment left to play with: the Mullard E88CC tubes, patiently awaiting my pleasure. Still, now that there’s such a significant difference in fidelity between my work and home setup (which consists of an Aqua Mini-Head amp and Beyerdynamic DT880 2005 edition cans) I feel compelled to experiment with new equipment. Whatever shall I try next, a new DAC? Another amp? Different cans, perhaps? The choices are endless, and that’s the beauty of it. See you on the next upgrade. Update: Some more pictures can be found here.
